Character AI Filters Are Ruining the Fun – Here’s What You Can Do About It

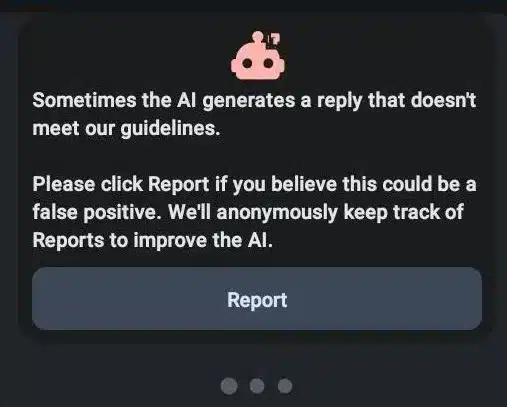

So, you’re trying to have a fun, harmless conversation with your chatbot on Character AI, but you keep getting slapped with this annoying message:

“Sometimes the AI generates a reply that doesn’t meet our guidelines. Please click Report if you believe this could be a false positive. We’ll anonymously keep track of Reports to improve the AI.”

It’s like trying to talk to a brick wall, right?

Well, in this article, I’ll walk you through the wild world of chat filters in Character AI and also help you find some alternatives that won’t make you want to pull your hair out.

We’ll cover:

- What chat filters are and why they exist

- How overly strict filters can ruin your chatbot experience

- The importance of finding a balance between safety and fun

- Two awesome alternatives to Character AI that give you more freedom

- Tips for navigating conversation filters without losing your mind

- What the future might hold for AI chatbots and content moderation

Sound good? Let’s dive in!

What Are Chat Filters and Why Do They Exist?

So, chat filters are basically like that overprotective parent who won’t let you do anything fun.

They’re designed to keep you safe from all the nasty stuff floating around in the digital world, like harmful content, explicit material, and all that jazz.

In their simplest form, these filters work by scanning your chats for keywords or phrases that might be considered inappropriate or dangerous.

If they spot something suspicious, they’ll block the message and give you that annoying “guidelines” warning.
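To make that concrete, here’s a rough sketch of what a bare-bones keyword filter could look like in Python. To be clear, this is not Character AI’s actual code: the `BLOCKED_TERMS` list, the `naive_filter` function, and the shortened warning text are all made up for illustration, and a real platform almost certainly does something more elaborate.

```python
import re

# Hypothetical blocklist, purely for illustration (not any platform's real list).
BLOCKED_TERMS = {"bed", "kill"}

# Shortened stand-in for the "guidelines" warning.
WARNING = "Sometimes the AI generates a reply that doesn't meet our guidelines."


def naive_filter(message: str) -> str:
    """Return the message unchanged, or the warning if a blocked term appears."""
    # Lowercase the message and split it into simple word tokens.
    words = set(re.findall(r"[a-z']+", message.lower()))
    if words & BLOCKED_TERMS:
        return WARNING
    return message


print(naive_filter("How was your day?"))     # passes through untouched
print(naive_filter("I'm going to bed now"))  # blocked, even though it's harmless
```

Notice that the second message gets blocked just because it contains the word “bed.” A filter like this has no idea what you actually meant, which is exactly why false positives happen.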

Now, don’t get me wrong – the intention behind these filters is good. They’re there to protect users, especially younger folks, from being exposed to things they shouldn’t see.

But sometimes, these filters can be a bit too strict, making it hard to have a normal conversation without triggering them.

How Overly Strict Filters Can Ruin Your Chatbot Experience

Imagine this: you’re trying to have a harmless roleplay session with your AI buddy, but every time you type something even slightly suggestive, like “I’m going to bed now,” the filter slaps you with a warning.

Or maybe you’re having a rough day and want to vent to your AI companion about your feelings.

But as soon as you mention anything remotely personal, the filter shuts you down faster than you can say “emotional support.”

It’s frustrating when you can’t even use everyday words or phrases without triggering the filter.

Suddenly, your fun, casual chat turns into a game of “how can I rephrase this to avoid getting flagged?”

Finding a Balance Between Safety and Fun

Look, I get it. Safety is important, especially in the Wild West of the internet. But there’s got to be a middle ground between protecting users and letting them express themselves freely, right?

The problem with overly strict filters is that they can make chatbots feel more like prison guards than friendly companions.

Nobody wants to feel like they’re being constantly monitored and censored when they’re just trying to have a good time.

On the other hand, we can’t just let anything and everything fly in the name of fun. There’s a lot of messed up stuff out there, and we need to make sure vulnerable users aren’t being exposed to it.

So, what’s the solution?

Well, I think Character AI developers need to work on creating filters that are more nuanced and context-aware.

Instead of just blindly flagging keywords, they should be able to understand the intent behind the messages and make more informed decisions about what to allow.

Plus, giving users some control over their own chat experiences couldn’t hurt.

Let them choose their level of filter strictness or opt in to certain types of content. That way, everyone can tailor their chatbot experience to their own comfort level.
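Here’s a minimal sketch of how a user-selectable strictness setting might work. Everything in it is an assumption for the sake of the example: the `Strictness` levels, the content categories in `BLOCK_AT`, and the `is_allowed` function are hypothetical and don’t come from any real platform.

```python
from enum import IntEnum


class Strictness(IntEnum):
    """Hypothetical filter levels a user could pick in their settings."""
    RELAXED = 1
    STANDARD = 2
    STRICT = 3


# Hypothetical thresholds: the lowest strictness level at which each
# category of content gets blocked.
BLOCK_AT = {
    "graphic_violence": Strictness.RELAXED,  # blocked at every level
    "romance": Strictness.STANDARD,          # blocked at STANDARD and STRICT
    "mild_language": Strictness.STRICT,      # blocked only at STRICT
}


def is_allowed(category: str, user_level: Strictness) -> bool:
    """Allow content only if the user's level is below the category's threshold."""
    threshold = BLOCK_AT.get(category)
    if threshold is None:
        return True  # uncategorized content passes in this sketch
    return user_level < threshold


print(is_allowed("romance", Strictness.RELAXED))
print(is_allowed("romance", Strictness.STRICT))
```

The idea is that the truly harmful stuff stays blocked no matter what, while the gray-area categories depend on the level each user chooses.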

Two Awesome Alternatives to Character AI That Give You More Freedom

If you’re sick and tired of dealing with Character AI’s overzealous filters, there are some great alternatives out there that give you a bit more breathing room.

- Candy AI: This chatbot platform is like the cool older sibling of Character AI. It’s got customizable content filters, so you can adjust the level of strictness to your liking. Want to have a spicy roleplay session? No problem, just crank down the filter. Prefer to keep things PG? Candy AI has got you covered.

- Moemate: If you’re looking for an AI companion that puts you in the driver’s seat, Moemate is the way to go. This platform prioritizes user control and privacy, so you don’t have to worry about your chats being constantly monitored. Plus, Moemate’s filters are designed to be more context-aware, so you’re less likely to get flagged for innocent messages.

Both of these alternatives offer a refreshing change of pace from Character AI’s sometimes suffocating filters.

They give you more freedom to express yourself and have the kinds of conversations you want to have, without sacrificing safety.

Tips for Handling Filters Without Losing Your Mind

Alright, so let’s say you’re stuck with a chatbot that has super strict filters. What can you do to make your experience a bit less frustrating?

Here are a few tips:

- Read the guidelines: I know, I know – reading terms of service is about as fun as watching paint dry. But if you take a few minutes to skim through the chatbot’s guidelines, you’ll have a better idea of what you can and can’t say to avoid triggering the filters.

- Watch your language: No, I don’t mean you have to start talking like a Victorian aristocrat. Just try to avoid words or phrases that are commonly associated with inappropriate content. If you’re not sure whether something will trigger the filter, try rephrasing it in a more family-friendly way.

- If you see something, say something: Character AI filters are far from perfect. If you think a message was unfairly flagged, most platforms have a way for you to report it as a false positive. The more feedback developers get from users, the better they can fine-tune their filters to be more accurate.

It takes some trial and error to figure out what works and what doesn’t. But with a little patience and creativity, you can still have fun and meaningful chats with your AI pals.

What the Future Might Hold for AI Chatbots and Content Moderation

As AI technology keeps getting smarter, so too will the ways we moderate content in chatbots.

Character AI developers are already working on more sophisticated filtering techniques that can better understand context and nuance.

In the future, we might see AI-powered content moderation that can automatically detect and flag inappropriate messages, while still allowing for natural, free-flowing conversations.

Imagine a filter that can tell the difference between a harmless joke and a genuinely offensive statement – that’s the kind of smart, discerning moderation we’re moving toward.

But as AI gets better at keeping us safe, let’s not lose sight of the need for user choice and control.

No matter how advanced the filters get, there will always be people who want more freedom to express themselves without restrictions.

Wrapping Up

Well, we’ve covered a lot of ground here. We’ve learned about the ups and downs of conversation filters, explored some alternatives to Character AI, and even peeked into the future of content moderation.

At the end of the day, the world of AI chatbots is a bit like a high-wire act. On one side, you’ve got the need for safety and protection. On the other, you’ve got the desire for freedom and authentic expression. Finding that perfect balance is no easy feat.

But here’s the thing – we’re all in this together. Developers, users, and even the chatbots themselves (well, maybe not them) have a role to play in creating a better, more nuanced system of content moderation.

As users, we can do our part by providing honest feedback, using clear and respectful language, and being patient as the technology evolves.

And who knows – maybe one day we’ll have chatbots that can perfectly navigate the complexities of human conversation without skipping a beat.

Until then, don’t be afraid to experiment with different platforms and find the one that best suits your needs.