OpenAI Explains Why Chatbots Hallucinate

OpenAI just shared a paper that digs into one of the most frustrating flaws in AI systems: hallucinations.

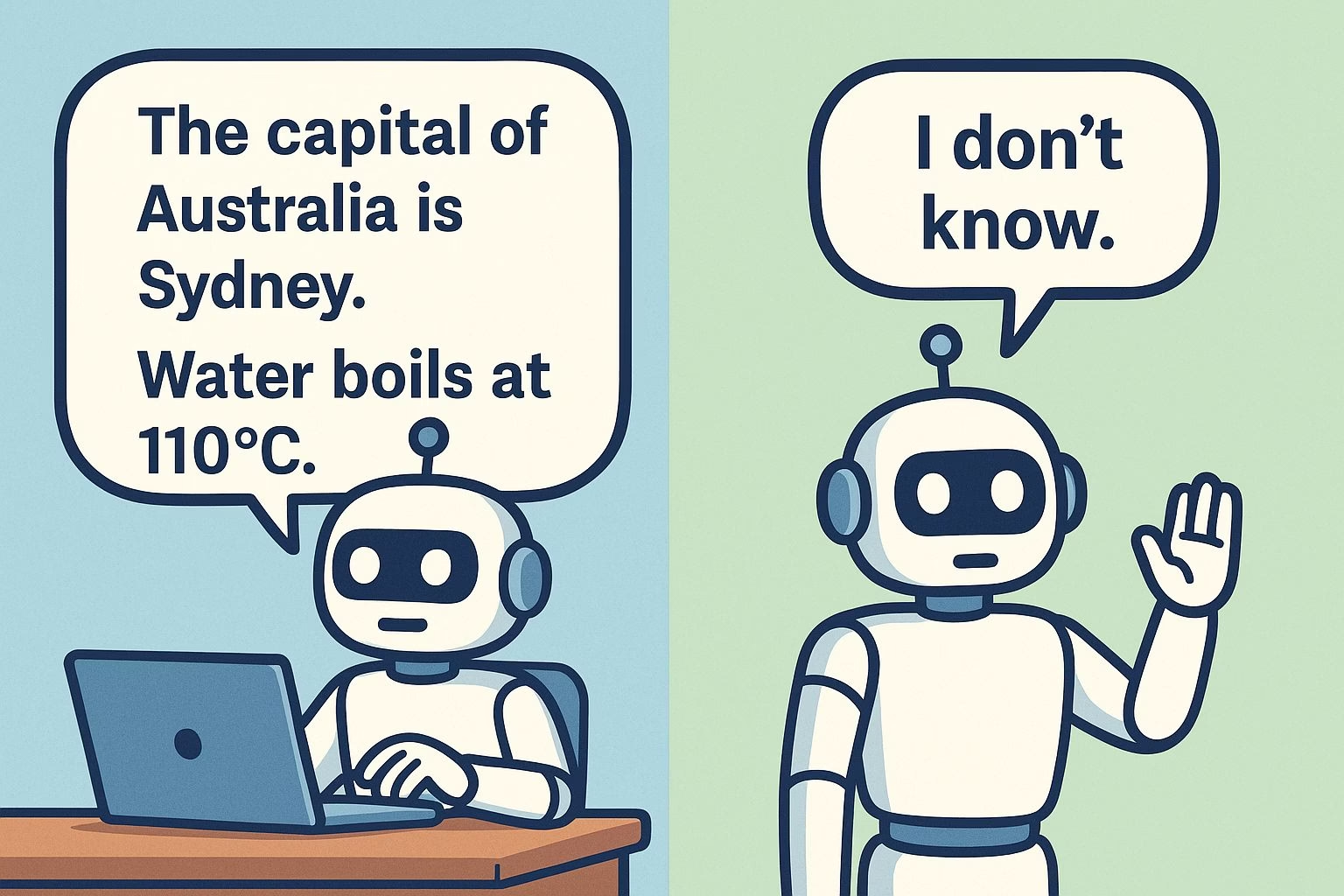

The researchers argue that chatbots don’t make things up because they want to mislead us, but because of how they are trained.

Standard training and evaluation methods reward them for guessing with confidence instead of admitting when they don’t know. That means a chatbot gets top marks for a lucky guess but nothing for saying “I’m not sure.”

Over time, models learn to always produce an answer, even if it is completely wrong.

The study tested this by asking models for things like exact birthdays and dissertation titles. Instead of refusing, the chatbots confidently gave different fake answers every time.

The team suggests flipping the training process to punish confident errors more than admissions of uncertainty.

The shift could lead to systems that are less flashy but more trustworthy, especially in areas where accuracy is non-negotiable.

Key Takeaways:

- Chatbots hallucinate because training rewards confident guessing instead of honest uncertainty.

- Current metrics give full credit for lucky guesses but zero for “I don’t know.”

- This teaches models to bluff rather than admit limits, eroding trust in real-world use.

- Rewarding honesty over confidence would lead to more reliable systems, even if test scores drop.

- Trust will define the future of AI, not inflated benchmark charts.

The problem with grading chatbots like school tests

OpenAI’s paper shows that chatbots aren’t rewarded for honesty. They’re rewarded for bluffing.

Imagine a school test where students get:

- Full marks for guessing the right answer

- Zero points for writing “I don’t know”

Over time, students would learn that it’s always better to guess than to admit uncertainty. That is exactly how current AI training works.
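The incentive in the school-test analogy can be put into numbers. The snippet below is a minimal sketch, not code from the paper: the function name `expected_score` and the probabilities are made up for illustration, and it assumes the simplest grading rule described above (1 point for a right answer, 0 for a wrong answer or for “I don’t know”).

```python
def expected_score(p_correct: float, abstain: bool) -> float:
    """Expected points on one question under binary grading.

    Assumed rule (illustrative): 1 point if correct, 0 if wrong,
    0 for answering "I don't know".
    """
    if abstain:
        return 0.0
    # A guess earns 1 point with probability p_correct, otherwise 0.
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


# Hypothetical confidence levels, from a wild guess to near certainty.
for p in (0.01, 0.25, 0.9):
    guess = expected_score(p, abstain=False)
    idk = expected_score(p, abstain=True)
    print(f"p={p}: guess={guess}, abstain={idk}")
```

Under this rule, guessing never scores below abstaining, even when the chance of being right is tiny, so a system optimized for the score has no reason to ever say “I don’t know.”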

When a chatbot is asked “What year was Nelson Mandela born?” it must answer, even if it is unsure.

If it says, “I don’t know,” the system treats it as a failure. If it blurts out “1925” instead of the correct 1918, that’s a confident lie, but it looks better on paper than silence.

Multiply that by billions of training examples, and you end up with models that are trained to hallucinate.

This is not intelligence. It’s a scoring trick that produces machines that look sharp in benchmarks but collapse in the real world.

How honest AI could actually work

The paper suggests punishing confident errors more than honest uncertainty.

To see why this matters, picture two doctors:

- Doctor A: says “I’m not sure, we need further tests.”

- Doctor B: says “It’s definitely X” but is often wrong.

Most people would pick Doctor A, because honesty about limits saves lives. Chatbots should be trained under the same logic.

If models could say “I don’t know” without penalty, they would start acting more like assistants we can trust. That doesn’t mean perfection. It means knowing when to stop instead of doubling down on falsehoods.
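One way to picture what “punishing confident errors more than honest uncertainty” could mean is a grading rule where a wrong answer costs points while “I don’t know” stays at zero. The sketch below is an illustration under that assumption, not the paper’s exact scheme: `wrong_penalty` and the function names are hypothetical.

```python
def expected_score_if_answering(p_correct: float, wrong_penalty: float) -> float:
    """Expected points for answering: +1 if right, -wrong_penalty if wrong."""
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """Answer only if it beats abstaining, which is assumed to score 0."""
    return expected_score_if_answering(p_correct, wrong_penalty) > 0.0


def break_even_confidence(wrong_penalty: float) -> float:
    """Confidence at which answering and abstaining score the same.

    Solves p - (1 - p) * wrong_penalty = 0 for p.
    """
    return wrong_penalty / (1.0 + wrong_penalty)


# With no penalty, even a 30% guess is worth making.
print(should_answer(0.3, wrong_penalty=0.0))
# With a penalty of 3 points, the same guess is no longer worth it.
print(should_answer(0.3, wrong_penalty=3.0))
print(break_even_confidence(3.0))
```

The design choice is the size of the penalty: the larger it is, the more confident the model has to be before answering pays off, which is the behavior we expect from Doctor A.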

The tradeoff is obvious: fewer flashy answers, more reliability. For critical tasks like medical advice, financial planning, or even legal assistance, that reliability matters more than showing off.

How AI labs chase numbers instead of trust

The obsession with accuracy scores is driving a lot of this mess. AI labs love to release charts showing how their models hit 90 percent on some benchmark.

What they don’t show is how many of those “right” answers are just lucky guesses.

Think about it: a chatbot that bluffs 100 times and happens to get 90 right looks great in testing. But in real life, those 10 wrong answers could be catastrophic.

If a chatbot “guesses” the wrong dosage of a medication, the cost of that confident error is far greater than losing points on an academic benchmark.
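To make the gap between an accuracy chart and real-world reliability concrete, here is a rough sketch that scores two result sets in different ways. The “bluffer” uses the 90-out-of-100 example above. The “cautious” model, the `Results` class, and the penalty value are hypothetical and chosen only for illustration; they are not figures from the paper or from any benchmark.

```python
from dataclasses import dataclass


@dataclass
class Results:
    correct: int    # answered and right
    wrong: int      # answered confidently and wrong
    abstained: int  # said "I don't know"

    @property
    def total(self) -> int:
        return self.correct + self.wrong + self.abstained

    def accuracy(self) -> float:
        """The headline number: share of all questions answered correctly."""
        return self.correct / self.total

    def confident_error_rate(self) -> float:
        """Share of all questions answered with a wrong answer."""
        return self.wrong / self.total

    def penalized_score(self, wrong_penalty: float) -> float:
        """Average score when each wrong answer costs wrong_penalty points."""
        return (self.correct - wrong_penalty * self.wrong) / self.total


bluffer = Results(correct=90, wrong=10, abstained=0)   # example from the text
cautious = Results(correct=85, wrong=1, abstained=14)  # hypothetical model

for name, r in (("bluffer", bluffer), ("cautious", cautious)):
    print(name, r.accuracy(), r.confident_error_rate(), r.penalized_score(3.0))
```

On accuracy alone the bluffer wins. Once wrong answers carry a cost, the ranking in this made-up comparison flips, because the cautious model makes far fewer confident errors.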

This is why current metrics feel dishonest. They inflate performance numbers while sweeping the real problem, reliability, under the rug. People don’t care if a model gets 90 percent in a lab setting. They care about whether they can trust it in their daily lives.

And right now, that trust is being traded away for bragging rights.

What could change if honesty were rewarded

If AI training shifted to reward honesty, we would see very different systems emerge.

Instead of confidently making up a fake dissertation title, a chatbot might finally respond, “I don’t have enough information to answer that.” That single shift could transform how people use these tools.

- In education, students could learn from AI that models have limits, instead of confidently copying wrong answers into essays.

- In healthcare, doctors could use AI as a cautious assistant, knowing it will flag uncertainty instead of delivering fiction as fact.

- In law, an AI assistant could warn, “This may not be accurate; check legal statutes directly,” instead of fabricating case law.

These kinds of changes would not only build trust but also force companies to rethink their priorities. The market would reward reliability over hype.

And frankly, that’s the direction this industry has to move in if it wants long-term credibility.

People forgive honesty but not lies

Most of us don’t mind when a system says, “I don’t know.” In fact, that response builds confidence because it shows the AI understands its limits.

The frustration comes when chatbots confidently invent information and present it as fact. That is not a small slip; it’s a breach of trust.

Think of a GPS app. If it tells you “no route found,” you adjust and find another way. If it sends you confidently into a dead end, you stop trusting it altogether.

Chatbots work the same way. Once users see too many false statements given with absolute certainty, they abandon the tool. The difference between silence and a confident lie is the difference between a tool you can work around and one you cannot rely on at all.

This is where OpenAI’s paper actually lands hard. It shows that hallucinations are not just a quirk of AI but a direct result of how companies have chosen to build them.

That choice can be changed.

The road ahead for reliable AI

The fix is simple in theory: stop rewarding machines for gambling with answers. Start rewarding them for honesty, even if that honesty lowers their test scores.

The harder part is convincing companies to give up the short-term PR boost of higher accuracy charts in favor of the slower work of building trust.

If labs take this path seriously, the future of AI looks much more stable. We could finally get systems that are more cautious, more transparent, and more aligned with how people actually use them.

But if companies ignore this and keep prioritizing benchmarks over trust, then hallucinations will remain baked into the fabric of every chatbot we use.

At RoboRhythms.com, we’ve seen how quickly users lose patience with AI tools that can’t be trusted. The industry doesn’t need smarter liars. It needs assistants that know when to stop talking.

And until that shift happens, hallucinations will keep being the Achilles’ heel of AI.