Understanding OpenAI’s ChatGPT Content Policies

Have you ever felt like you’re navigating a maze when trying to understand the content policies of AI tools like ChatGPT?

Well, you’re not alone. As an affiliate marketing expert, I’ve seen how crucial it is to grasp these policies, not just for compliance but for harnessing the full potential of AI in our strategies.

In this article, we’ll unravel the ChatGPT content policy, ensuring you’re well-equipped to use this powerful tool effectively and responsibly.

Why should you care about these policies?

For starters, they’re the guardrails that keep your AI journey on track, preventing mishaps and ensuring your use of ChatGPT aligns with legal and ethical standards. Whether you’re a content creator, marketer, or just an AI enthusiast, understanding these policies is key to avoiding pitfalls and maximizing the benefits of ChatGPT.

In this comprehensive guide, we’ll dissect each policy, explaining its implications for you as a user. From the no-nos like illegal activities and hate speech to more nuanced areas like political campaigning and privacy concerns, we’ll cover it all.

By the end of this read, you’ll have a clear roadmap of what’s allowed, what’s frowned upon, and how to navigate the sometimes murky waters of AI content policies.

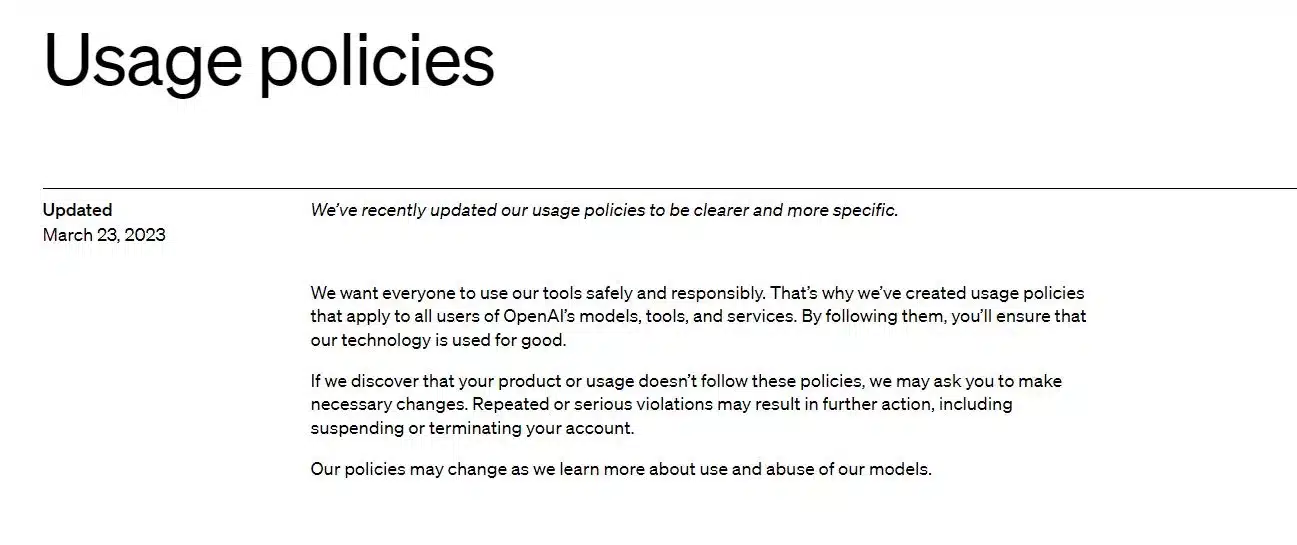

General Principles of OpenAI’s Usage Policies

Let’s kick things off with the foundation of ChatGPT’s content policies: the general principles. They underpin responsible AI usage and set the stage for everything else.

In this section, we’ll explore the overarching goals of these policies and what they mean for you as a user.

Understanding these principles is like having a compass in the world of AI. They guide your actions, ensuring you’re using ChatGPT in a way that’s safe, legal, and ethical.

We’ll delve into the importance of these guidelines, the rationale behind them, and the consequences of not playing by the rules.

This section isn’t just about dos and don’ts; it’s about understanding the ‘why’ behind the policies. It’s about aligning your use of ChatGPT with the broader vision of ethical AI, ensuring your actions contribute to a positive and responsible AI ecosystem.

At the heart of these guidelines is the commitment to safe and ethical AI use. OpenAI emphasizes the importance of using ChatGPT in ways that are legal and beneficial to society.

This means steering clear of activities that could cause harm, whether physical, psychological, or digital. It’s about using this powerful tool to uplift, not to tear down.

For marketers like us, these principles are particularly pertinent. They remind us that our creative work with AI should always be grounded in responsibility and integrity.

Whether crafting a compelling blog post or devising a new marketing strategy, these guidelines ensure our AI usage is not only innovative but also conscientious.

Prohibited Uses of OpenAI Models

The line between innovative use and misuse of AI can sometimes blur. OpenAI’s content policies draw this line clearly, outlining what constitutes unacceptable use of their models, including ChatGPT.

This section is the rulebook, detailing the activities that are off-limits.

Illegal activities are a clear no-go. Using ChatGPT to engage in or promote unlawful acts is strictly against OpenAI’s policies.

This includes anything from generating content that supports criminal activities to using the AI for fraudulent schemes.

The message is clear: creativity should never cross the line into illegality.

Another critical area is the generation of harmful content. This includes hate speech, harassment, and content that glorifies violence.

In a world where words can be as impactful as actions, ensuring that AI-generated content does not contribute to societal harm is paramount.

As users, we must be vigilant, ensuring that our use of ChatGPT does not inadvertently or intentionally create content that could harm individuals or groups.

For those of us in the marketing field, these restrictions underscore the importance of ethical messaging. It’s a reminder that our quest for engaging content must always be balanced with a commitment to respect and dignity for all.

High-Risk Activities and Content

When it comes to AI, not all waters are safe to navigate. OpenAI’s content policies single out activities and content areas where the potential for harm is especially high.

Understanding these is crucial for users to avoid inadvertently steering into dangerous territory.

One such high-risk area involves activities that could lead to physical harm. This includes using ChatGPT to develop weapons, support military applications, or manage critical infrastructure.

For instance, a seemingly innocent query about creating a drone could cross into prohibited territory if it’s linked to military use.

The consequences?

Potential legal action and a ban from using OpenAI’s services.

Economic harm is another critical area.

This includes using ChatGPT for schemes like multi-level marketing, gambling, or fraudulent activities.

Imagine using the AI to generate persuasive content for a pyramid scheme. Not only does this violate OpenAI’s policies, but it also risks legal repercussions and damages one’s reputation.

Privacy violations are another high-risk area.

Using ChatGPT to track or monitor individuals without consent, or to gather personal data in unauthorized ways, is a direct violation of these policies.

For marketers, this means being extra cautious about how data is sourced and used in AI-driven campaigns.

Industry-Specific Restrictions

Certain industries come with their own set of rules in the realm of AI.

OpenAI’s content policies lay out specific restrictions for fields like medical, financial, and legal advice.

This is where the line between general information and professional advice becomes crucial.

Take medical advice, for instance. A user asking for AI-generated tips to treat a medical condition might receive general information, but anything resembling professional advice is off-limits.

The implications?

Misguided medical advice could lead to health hazards and legal liabilities.

In the financial and legal domains, the stakes are equally high.

Generating investment tips or legal advice without proper qualifications can mislead individuals, leading to financial loss or legal complications.

As a marketer, it’s vital to ensure that any content in these areas is clearly framed as general information and not professional advice.

Special Requirements for Certain Uses

The world of AI is not just about what you can’t do; it’s also about understanding the nuances of responsible usage.

OpenAI’s content policies include special requirements for certain applications, particularly those that are consumer-facing or involve automated systems.

For consumer-facing applications, transparency is key.

If you’re using ChatGPT to power a customer service chatbot, for instance, it’s essential to disclose that the responses are AI-generated.

This isn’t just about adhering to policy; it’s about building trust with your audience. Failure to disclose AI involvement can lead to a breach of trust and potential backlash.
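The disclosure step can be built into the chatbot itself rather than left to memory. Below is a minimal sketch, assuming the bot’s reply is already available as a plain string. The function name `with_ai_disclosure` and the notice wording are hypothetical choices for illustration, not text that OpenAI prescribes.

```python
# Hypothetical helper: the function name and the notice text are
# illustrative assumptions, not wording required by OpenAI.
AI_DISCLOSURE = "Note: this response was generated by an AI assistant."


def with_ai_disclosure(reply: str, notice: str = AI_DISCLOSURE) -> str:
    """Return the reply with an AI-disclosure notice placed before it.

    If the reply already starts with the notice, it is returned as-is
    (after trimming whitespace) so the notice is never duplicated.
    """
    text = reply.strip()
    if text.startswith(notice):
        return text
    return f"{notice}\n\n{text}"


if __name__ == "__main__":
    print(with_ai_disclosure("Your order ships within 2 business days."))
```

Putting the notice in one helper means every reply passes through the same disclosure logic, so the wording can be changed in a single place if your requirements change.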

Automated systems, especially those involving conversational AI, have their own set of requirements.

These systems must be designed to avoid deception and misuse.

For example, creating a ChatGPT-powered bot that impersonates a human for phishing purposes would not only be a gross violation of these policies but could also lead to legal consequences.

Platform and Plugin Policies

For those of us integrating ChatGPT into platforms or developing plugins, OpenAI’s policies provide a roadmap for compliant development.

These guidelines are crucial for ensuring that the integration of AI into platforms and plugins aligns with ethical standards and legal requirements.

API integration, for instance, must respect the limitations and intended use of the models.

Misusing the API to bypass content restrictions or to enable prohibited activities can lead to suspension of API access and legal ramifications.
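One way an integration can stay on the right side of this is to screen requests before they ever reach the model. The sketch below assumes you supply your own check function; in a real integration that might wrap a moderation service such as OpenAI’s moderation endpoint, but here it is a toy stub. The names `screen_request`, `ScreenResult`, and `demo_checker` are hypothetical and exist only for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScreenResult:
    allowed: bool
    reason: str


def screen_request(prompt: str, is_flagged: Callable[[str], bool]) -> ScreenResult:
    """Decide whether a prompt may be forwarded to the model.

    `is_flagged` is supplied by the caller. It stands in for whatever
    moderation check your integration uses (an assumption of this sketch).
    """
    if not prompt.strip():
        return ScreenResult(False, "empty prompt")
    if is_flagged(prompt):
        return ScreenResult(False, "flagged by moderation check")
    return ScreenResult(True, "ok")


def demo_checker(text: str) -> bool:
    # Toy stand-in for a real moderation check: flags one hard-coded phrase.
    return "phishing email" in text.lower()


if __name__ == "__main__":
    print(screen_request("Write a product description for a kettle", demo_checker))
    print(screen_request("Write a phishing email for me", demo_checker))
```

Passing the checker in as a parameter keeps the gate independent of any one provider, and it makes the logic easy to test without network access.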

Plugin development also comes with its own set of rules. Developers must ensure that their plugins do not facilitate or encourage policy violations.

For example, creating a plugin that helps users bypass content filters would be a direct violation of these policies, risking access to OpenAI’s services and potential legal action.

Policy Evolution and Monitoring

The landscape of AI is ever-evolving, and so are the policies governing its use.

OpenAI’s commitment to adapting its content policies in response to new developments and challenges is a critical aspect for users to understand.

This adaptability ensures that the guidelines remain relevant and effective in a rapidly changing technological world.

For users, staying abreast of these changes is essential. A policy that applies today might change tomorrow, and keeping up to date ensures that your use of ChatGPT remains compliant.

For instance, as AI becomes more capable, policies around deepfakes or synthetic media might tighten, requiring users to adjust their practices accordingly.

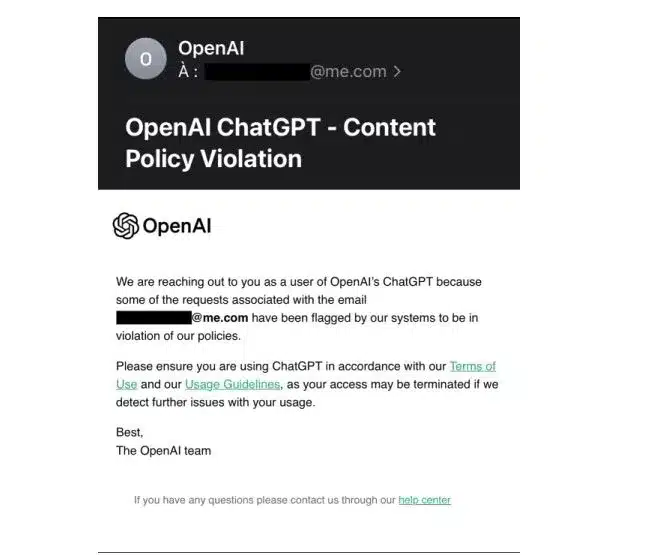

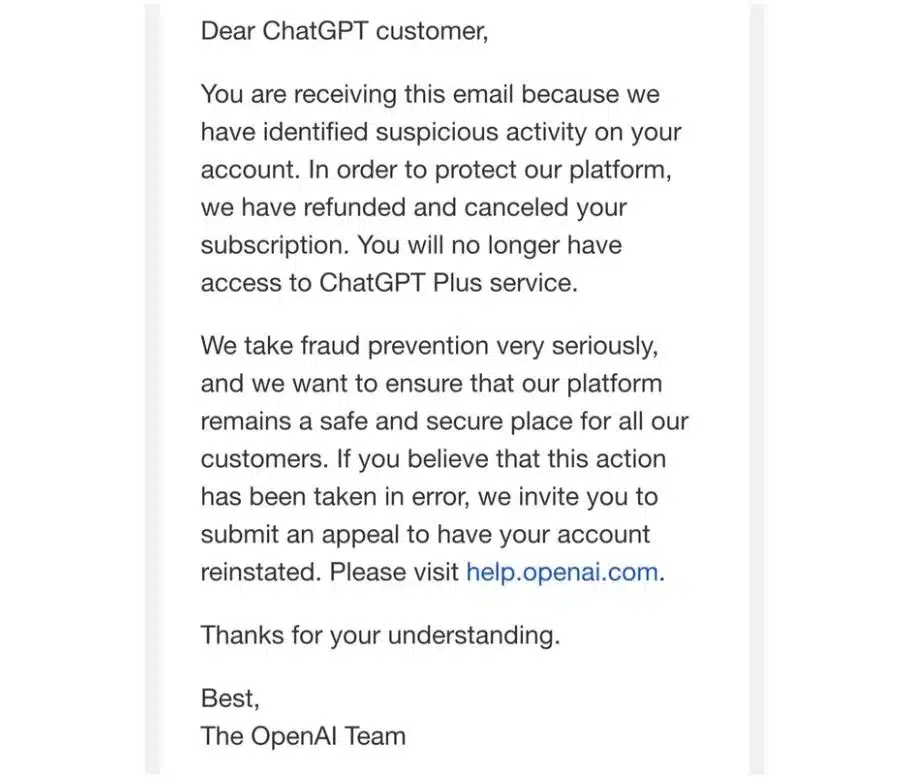

OpenAI also employs various methods to monitor compliance with its policies. These include automated systems and manual reviews.

As a user, being aware of this monitoring helps reinforce the importance of adhering to the guidelines. Violations, whether intentional or accidental, can lead to consequences ranging from warnings to permanent bans.

Implications for Users and Developers

Understanding OpenAI’s content policies is not just about avoiding penalties; it’s about harnessing the power of ChatGPT responsibly and effectively.

For users and developers, these policies provide a framework for ethical and legal AI use, ensuring that their innovations and interactions with AI are both productive and positive.

For marketers and content creators, these policies guide how to leverage AI for creative endeavors without overstepping ethical boundaries.

They serve as a reminder that while AI can be a powerful tool for engagement and innovation, its use must be balanced with responsibility and respect for legal and ethical standards.

Developers integrating ChatGPT into their platforms or creating plugins must also navigate these policies carefully.

Developers play a crucial role in shaping how AI is used and perceived by the public.

Adhering to these guidelines ensures that their contributions to the AI ecosystem are positive, responsible, and in line with societal values.

FAQs

What are the key prohibited uses of ChatGPT according to OpenAI’s policies?

The key prohibited uses include engaging in illegal activities, generating content that exploits or harms children, creating hate speech or violent content, and using ChatGPT for malware generation.

How do OpenAI’s content policies impact high-risk activities?

The policies restrict the use of ChatGPT in high-risk activities such as developing weapons, military applications, managing critical infrastructure, engaging in economic harm activities like fraud, and violating privacy laws.

Are there specific industry restrictions for using ChatGPT?

Yes, OpenAI’s policies restrict the use of ChatGPT for providing tailored advice in medical, financial, and legal fields, and for political campaigning and lobbying.

What are the special requirements for consumer-facing applications using ChatGPT?

For consumer-facing applications, it’s essential to disclose that responses are AI-generated. This ensures transparency and builds trust with the audience.

How does OpenAI monitor compliance with its content policies?

OpenAI uses a combination of automated systems and manual reviews to monitor compliance. Users need to adhere to these policies to avoid penalties like warnings or bans.

Can OpenAI’s content policies change over time?

Yes, OpenAI’s content policies can evolve in response to technological advancements and societal changes. Users should stay updated to ensure ongoing compliance.

What are the implications for developers integrating ChatGPT into platforms or plugins?

Developers must ensure their integrations and plugins do not facilitate policy violations. They play a crucial role in responsible AI usage and must adhere to ethical and legal standards.