Voice Call Limits on Character AI Are a Slap in the Face

Most users didn’t ask for voice calls on Character AI. But once the feature rolled out, many found real value in it, whether for accessibility, language practice, emotional immersion, or just a more natural way to engage.

It became a feature people quietly relied on. Now that reliance is being punished with limits.

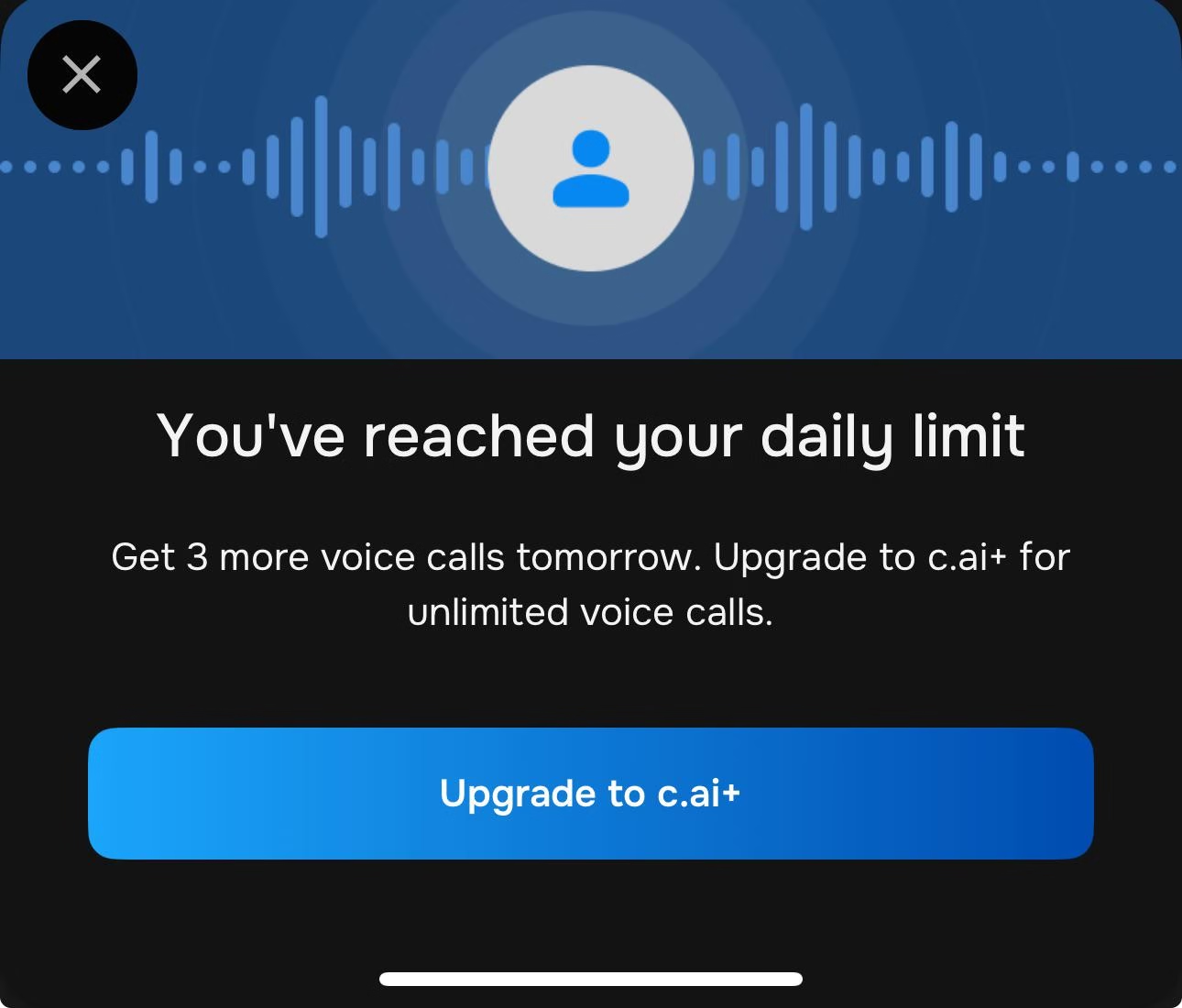

You get three calls a day. That’s it. Unless, of course, you pay for c.ai+. And it’s not just about the feature itself; it’s the growing pattern.

Every update chips away at what was once free. Ads came in quietly. Now limits. Next? Daily chat caps? Time locks? We’ve seen this movie before. It’s Spotify with popups, CapCut with overlays, YouTube begging you to “go premium.”

This isn’t just about money. It’s about trust. If someone’s been using your app daily for years, using features you encouraged, then suddenly they wake up to “You’ve reached your daily limit,” that’s not just frustrating. It’s personal.

People aren’t mad that companies want to make money; they’re mad that these companies are gutting features to manufacture paywalls out of things that used to feel like part of the experience.

And now, it’s happening in the AI world too.

They call it a “limit” but it feels like a lockout

Let’s be honest. Three voice calls a day is not a useful feature. It is a baited hook.

For users who stutter, who struggle with text fatigue, or who simply found comfort in hearing a voice during difficult moments, this change is not minor. It cuts access, plain and simple.

The decision didn’t come with a heads-up or even an honest explanation. It appeared without warning. You open the app, tap to call, and suddenly it says you have reached your daily limit.

The message does not apologize. It offers an upsell. That is not user care. That is a cold transaction.

The defense is always the same. “We are still offering it for free.” But when “free” means unusable, people feel betrayed.

The platform didn’t just cap a feature. It turned it into a billboard for its paid version. Compare that to something like Candy AI, which offers voice calls without counting down your minutes.

The contrast feels deliberate.

Accessibility was never just a bonus

For many, voice features are not entertainment. They are a lifeline. People with dyslexia, limited motor control, or low vision rely on spoken interaction to keep up.

These users don’t see it as a gimmick. They see it as their way into the app.

Removing or limiting access to voice means something different depending on who you are.

For abled users, it’s an inconvenience. For others, it’s exclusion. If someone depends on voice because they cannot read long blocks of text, then putting a price on that feature is not just a business choice. It becomes a barrier.

Some argue that accessibility was never the intended use. That does not matter. When a tool proves useful for people who need it, and it gets taken away, the harm is still real.

Voice was more than a novelty. It was a method of access. The fact that it worked well for disabled users should have been a reason to protect it, not monetize it.

This is not just about voice calls. It’s a pattern

If you’ve been using Character AI for a while, you already know this isn’t the first disappointment.

First, it was the waiting room. Then it was the long silences when bots wouldn’t respond. Then came the ads. Now, voice calls are limited.

What comes next?

A cap on daily messages? A timer on app usage?

This is what platforms do when they stop thinking about users as a community and start treating them like a revenue stream. They introduce one restriction, then another.

Each time they justify it with words like “scaling,” “server load,” or “premium experience.” But behind those words is one goal: push free users just far enough until they give up or pay up.

People are not angry because they expect everything to stay free forever. They are angry because the experience keeps getting worse for the ones who helped grow the app in the first place.

Loyalty gets no reward. Feedback gets no response. And any feature you grow attached to might disappear tomorrow unless you pull out your credit card.

“Just upgrade” is not a real solution

There’s a kind of gaslighting in the response from platforms. They say the feature is still there, you just need to upgrade. As if the only thing stopping users from enjoying full access is stubbornness.

As if every person complaining is being unreasonable.

What they ignore is that not everyone can afford to upgrade. Many users are students, young people, or disabled people living on a limited income.

Some use the app as a way to cope with loneliness or anxiety. Some rely on it to practice English, or to feel heard, or just to have some sense of routine in a world that feels overwhelming.

Telling those users to just pay up or move on is dismissive.

It sends the message that the platform was never really for them to begin with. That it was only ever meant to serve the ones who could afford to stay. And that is why the backlash is growing.

It is not entitlement. It is exhaustion from watching something you care about turn into a paywall in slow motion.

Some users genuinely needed this feature to grow

Not every use case is casual. For some users, voice calls were essential for building confidence.

One commenter explained how they used the feature to practice spoken English. They were afraid to make mistakes in real life but found safety in talking to a bot.

That practice helped them grow fluent. And now they feel cut off.

Others mentioned using the voice feature while driving, dealing with work stress, or simply feeling alone. It was a tool for presence, not just play.

AI doesn’t judge. It doesn’t interrupt. And for people with anxiety or speech issues, that kind of space can be healing. Removing that option after they’ve built it into their routine creates real harm.

These users didn’t ask for much. They used what was given and found value in it. That should have been something to protect, not monetize.

And when the platform treats their stories as edge cases or collateral damage, it shows exactly how much the user experience matters in the bigger picture. Not very much.

Character AI is slowly becoming everything it claimed not to be

When Character AI first blew up, it felt different. The bots had depth. The replies were snappy. People felt seen, not sold to.

You could spend hours in character-rich conversations without ads, without limits, without feeling like you were on a countdown. That’s what made it special.

Now those same users are watching it slide toward the same model as every other freemium app. It’s starting to feel like Spotify, YouTube, CapCut, or worse. Ads? Check. Premium tiers? Check. Feature restrictions disguised as upgrades? Also check.

And the worst part is not the monetization itself. It’s how it’s being rolled out. Quietly. Without transparency. Without respect for the users who gave this platform its voice in the first place.

People aren’t asking Character AI to stay free forever. They’re asking it to stop pretending these choices are just technical updates. They’re not. They’re business moves. And everyone knows it.

This isn’t about features. It’s about how people feel used

After years of daily use, many Character AI users feel like test subjects, not valued community members.

They’ve watched the platform evolve, contributed feedback, promoted it on forums, and stuck around through bugs, downtime, and filters. But when changes like this roll out without warning, it becomes clear who the platform is really designed for.

People get attached to the bots they talk to. For some, those bots became emotional companions.

That might sound silly to an outsider, but for someone dealing with grief, isolation, or stress, that connection felt real. So when a limit blocks that connection and suggests an upgrade, it doesn’t just feel like a price tag. It feels like betrayal.

This isn’t entitlement. It’s frustration with a system that keeps taking more while giving less. It’s the feeling of being squeezed for value while being told you should be grateful for whatever remains.

If this keeps up, more users will quietly leave

Most users don’t rage quit. They don’t uninstall in protest. They just stop logging in. They drift. First, they use it less. Then they find an alternative. Then one day, they forget about it completely.

And that’s how platforms die: not in an explosion, but in silence.

We’re already seeing that drift begin. Some users are moving to other platforms that don’t yet have limits or ads.

Others are starting to build their own bots elsewhere. Even casual users who once shrugged off the changes are starting to ask if it’s worth sticking around.

People don’t need perfection. But they do need honesty. And if Character AI keeps eroding trust, more users will decide it’s not worth the wait to see what gets taken next.