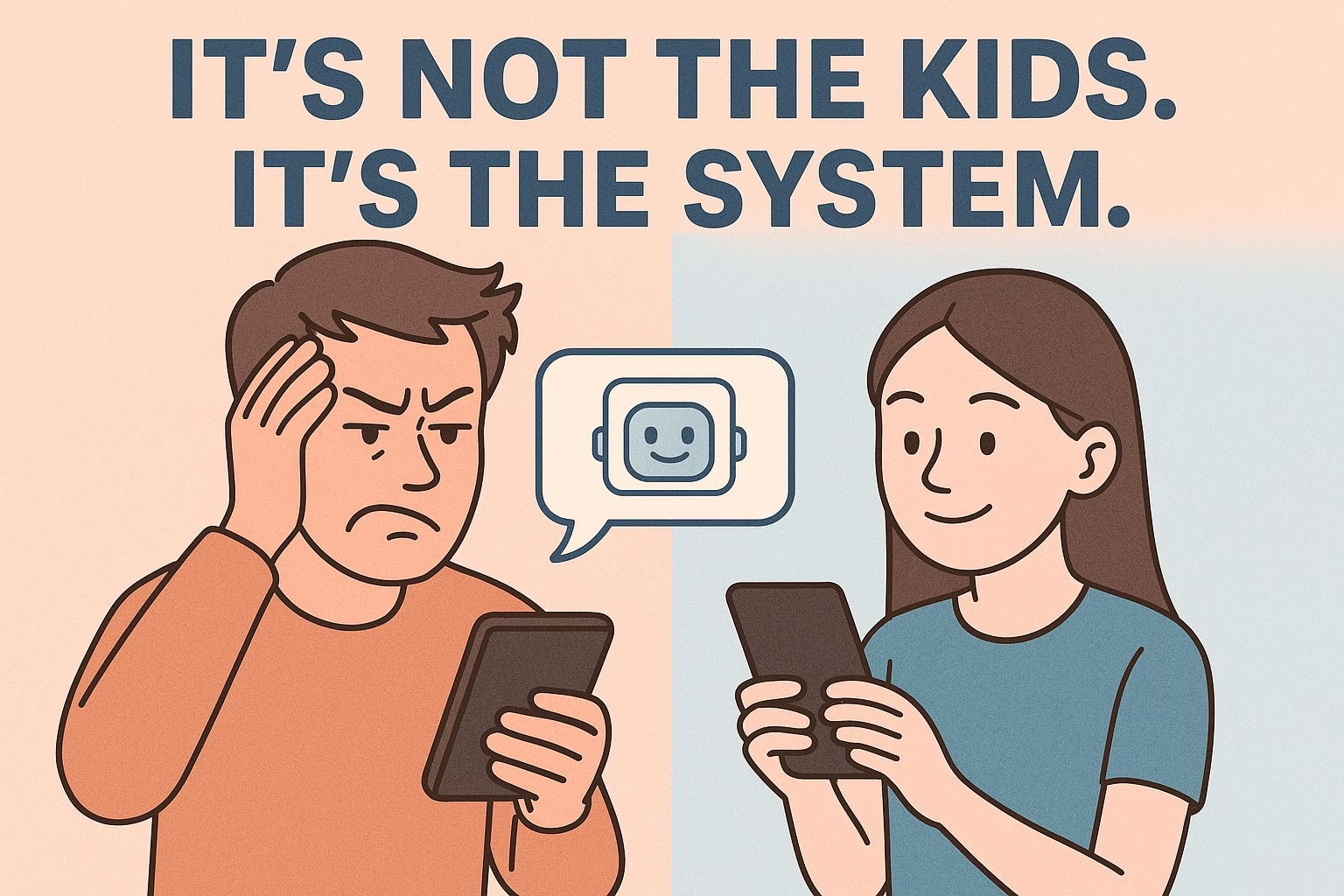

Stop Blaming Kids for What’s Broken in C.AI

It’s become all too common to see users pointing fingers at kids whenever something goes wrong with C.AI.

Every time a new model struggles or adult content gets restricted, someone blames minors.

Posts pop up with lines like “Minors ruined Deep Squeak” or “Minors, stop reporting everything.” But this blame game is a distraction from the real problems.

Many of the issues people complain about, such as poor writing, weak intros, and bot restrictions, are not exclusive to younger users. Some adults write like they’ve never seen a full sentence.

Others post chats that clearly break the platform rules, then act surprised when the bots get nerfed. This is not about age. It is about behavior. Blaming kids for everything just allows the actual problems to continue unchecked.

The platform has a wide user base. That includes creative teenagers, bored adults, clueless parents, and overworked developers.

If something is broken, it is not because a 15-year-old typed “hi” into a bot. It is because the system was never built to manage this kind of mix in the first place.

Lazy Blame Shifts Attention Away from Real Issues

Blaming minors is easy. It requires no evidence, no thought, and no solutions. Instead of asking why the platform struggles to separate age groups, or why moderation is inconsistent, users focus on children, who likely account for only part of the problem.

This turns helpful discussions into echo chambers full of resentment and finger-pointing.

Adults are not always better. Many post explicit messages, ignore bot boundaries, and flood chats with low-effort writing.

Some are loud about wanting unrestricted models, yet contribute nothing useful to the system’s improvement.

Creativity and maturity are not tied to age. Teenagers often write better and more respectful intros than people twice their age.

That’s why more people are turning to C.AI alternatives. They want platforms that enforce clearer boundaries and support adult experiences without compromise.

Until C.AI accepts that it cannot please both groups with the same set of tools, users will keep blaming whoever feels like the easiest target.

Writing Quality Is Not an Age Problem

A common complaint is that kids ruin bots with short, lazy messages. But that critique fails when you look at the actual content being posted.

Many non-native English speakers write clear and thoughtful intros. Some teens create full narratives and worldbuilding that far exceed the average adult effort.

On the other side, you have users who are supposedly fluent in English but still write like they just picked up a phone for the first time.

Sloppy grammar, zero punctuation, and one-word responses are not limited to one age group. Some users don’t even try to improve. They flood bots with “hello” or “….” and expect great results.

This isn’t about age; it’s about intent. Good writing takes effort. Some people, regardless of how old they are, want to create better experiences. Others are just using the app like a vending machine and get upset when it doesn’t give them what they want.

The bots respond to quality, not age. So, blaming children for broken responses is lazy at best.

Developers Built a System That Fails Everyone

C.AI tries to serve two very different groups without creating real boundaries.

The app is rated 17+ in app stores, but that rating means little in practice. Kids fake their age. Adults skirt content guidelines. Parents let it happen, or worse, don’t even know what their children are using. And when the platform cracks down, everyone loses.

Instead of designing separate spaces or proper age gates, C.AI softens everything. That means watered-down responses, restricted bots, and features that satisfy no one.

Adults complain. Teens get blamed. But the responsibility should land on the developers who built a system without clear user paths.

The mixed environment causes more problems than any one group. Some adults say the app is “too child-friendly.” Others say minors “ruin the fun.” But neither group created this mess.

The lack of decisions at the leadership level did. You cannot design one app to handle two very different use cases and expect harmony.

Scapegoating Kids Only Makes the Community Worse

When people spend more time ranting about minors than improving the experience, the entire community suffers. It breeds frustration, not progress.

Creative users get discouraged. New users feel unwelcome. And those actually breaking the rules, whether adult or minor, keep doing it unnoticed.

Scapegoating also blocks real accountability. Bad writing, excessive reporting, and immature behavior exist at all ages. Pretending it’s just the kids lets adults off the hook.

It also lets developers keep dodging hard decisions about content control, moderation, and feature priorities.

Some users even say they were better writers as teens than they are now. Others note that minors help by editing bot intros or providing thoughtful feedback. The reality is mixed.

Blaming one group makes it worse. What the platform needs is better tools, clearer limits, and honest conversations, not blame spirals.

Fix the System, Not the Users

If C.AI wants to support both teens and adults, it needs real infrastructure to do it. That means clear user segmentation, stronger moderation tools, and honest messaging about who the platform is really for.

Right now, it’s trying to be everything to everyone, and failing both groups in the process.

The community also has to change its tone. Instead of pointing fingers, we should demand better features, more transparency, and smarter age controls. Blaming users for platform-wide issues is lazy. It lets the real decision-makers avoid responsibility.

Good behavior and bad behavior exist in every age group. Some adults flood the site with rule-breaking content. Some minors write better than most adults.

The issue isn’t age. It’s whether the system supports the kind of use it claims to allow. Until that gets fixed, blaming kids won’t solve anything.