Sora 2 breaks the internet with new AI social video app

Sora 2 key takeaways

- Sora 2 adds synchronized audio and dialogue with stronger physics and more natural scene changes.

- Clips run about 5–10 seconds, which supports smoother pacing and short stories without extra editing.

- The new Sora app centers on Cameos so you can insert your likeness into AI videos and remix trends inside one feed.

- Launch scope: free tier with usage limits in the U.S. and Canada; Pro offers higher access; API is on the roadmap.

Why this matters

- Creation and distribution live in one place, which can speed up viral formats and collaboration.

- Audio-visual sync makes meme clips, sketches, and short narratives easier for solo creators.

Risks to watch

- Impersonation through Cameos and confusion between real and synthetic voices.

- Privacy concerns around likeness capture and storage.

- Content “slop” that can lower feed quality if remixing overwhelms original work.

What to do next

- Test short, character-driven scenes that use dialogue and on-screen actions.

- Create a safe-use plan for Cameos: consent, labeling, and takedown steps.

- Pilot a weekly format inside the app, then cross-post winners to other channels.

OpenAI just rolled out Sora 2, and it already feels like a turning point for AI video.

The model doesn’t just push visuals further; it now pairs them with synchronized audio and dialogue.

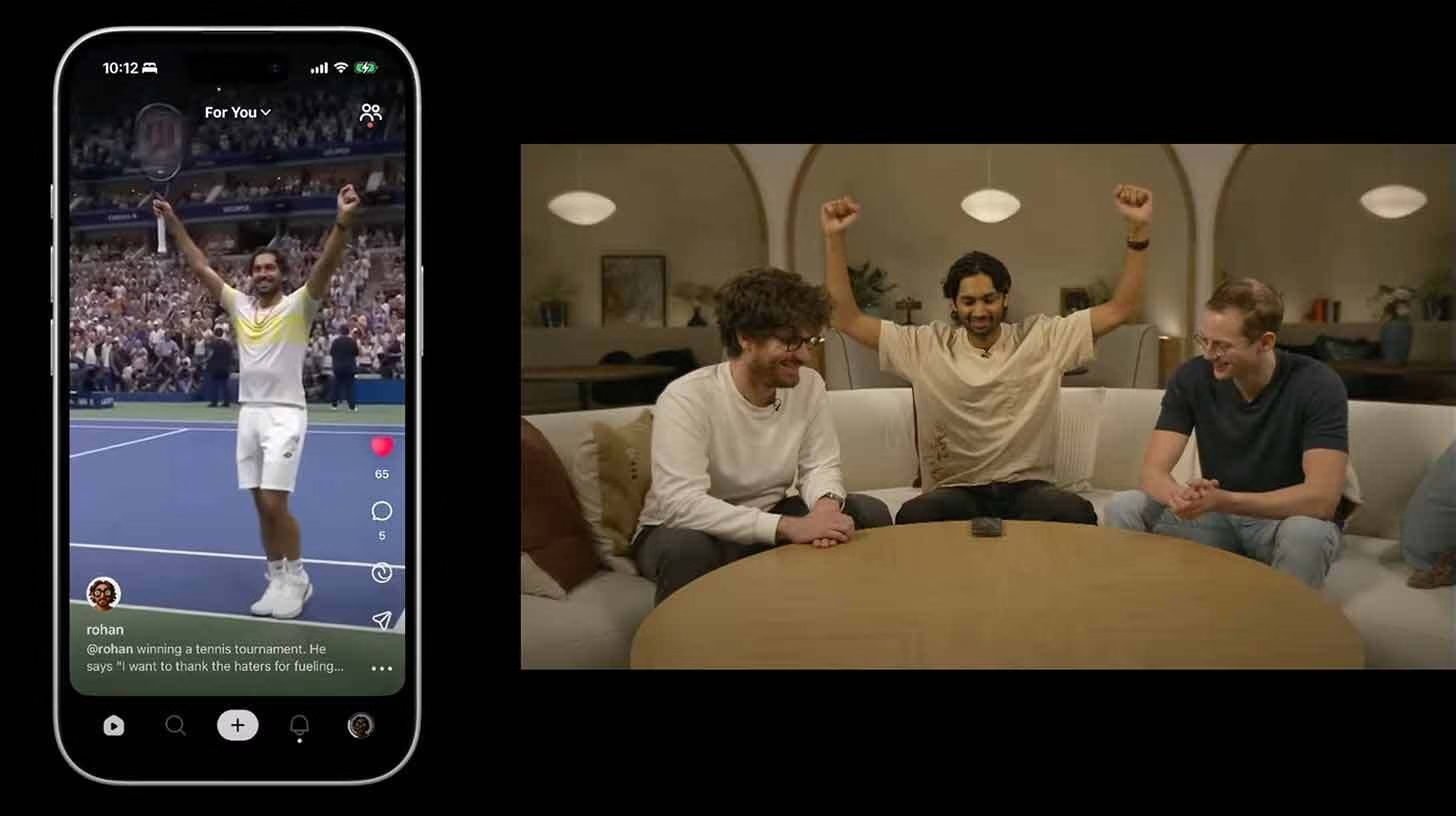

On top of that, OpenAI launched a new Sora app where people can remix clips, add their likeness, and even appear in AI-generated scenes through a feature called Cameos.

The improvements are hard to miss. Sora 2 generates longer clips of 5–10 seconds that handle scene changes more naturally. The physics look far smoother than in earlier versions.

Dialogue and sound effects stay in sync with what’s happening on screen, making the results more believable than what we’ve seen before.

The social app is just as bold. Cameos lets you record yourself once and then drop that likeness into endless AI-made videos. That setup could fuel viral trends fast, though it also raises the question of how long the novelty will last.

For now, the app is free in the U.S. and Canada with usage limits, while Pro subscribers get extended access and the higher-quality Sora 2 Pro model. An API is also on the way, which could make this model part of bigger creative pipelines.

What stands out is how quickly OpenAI is experimenting with not just models but also distribution. A video tool tied to a social app changes how people interact with AI output.

Instead of just generating clips, users remix, react, and spread them in a built-in ecosystem.

That shift might matter more than the technical update itself, because it places AI video right in the center of online culture.

How does Sora 2 improve on the first version?

The first release of Sora was impressive for short, physics-aware clips, but it had limits. Animations sometimes broke when objects interacted, and longer scenes often felt stitched together.

Sora 2 addresses these issues directly. It produces clips of up to 10 seconds, which allows for more natural pacing and story flow. Scene transitions no longer feel abrupt, and motion carries across cuts more smoothly.

Another major leap is in physics modeling. Characters can run, fall, or interact with objects without breaking immersion.

Small details, like shadows or clothing folds, hold together as action unfolds. This is where users notice the difference most, since real-world dynamics are often the hardest to fake.

The addition of synchronized audio marks a turning point. Instead of silent videos, Sora 2 can generate dialogue, sound effects, and ambient noise that match the visuals.

A street scene might include footsteps, cars passing, and background chatter, all tied to what happens on screen.

That kind of alignment makes clips easier to use in storytelling, memes, or short creative projects without outside editing.

For creators, these improvements mean less post-production and more direct output.

Where the first Sora was a test bed, Sora 2 feels like a tool ready for wider creative use.

What the new Sora app means for creators

The launch of the Sora app adds a social layer that wasn’t there before. Instead of downloading clips to share on third-party platforms, users now have a built-in hub.

Videos can be created, remixed, and spread inside the same ecosystem. That design mirrors what TikTok or Snapchat did for short-form video, but with AI as the engine behind every post.

The highlight is the Cameos feature. With one recording, people can insert their likeness into generated videos.

That creates endless opportunities for playful content: appearing in different movie scenes, joining fictional characters, or reacting to absurd scenarios. It lowers the barrier for personal storytelling since no advanced editing is required.

For creators who thrive on trends, Cameos might spark a wave of viral challenges. The novelty of seeing yourself dropped into surreal AI clips has clear appeal.

Yet the question remains whether that excitement lasts, or if the platform risks becoming another short-lived experiment.

The free tier in the U.S. and Canada makes it accessible enough to test, while Pro subscribers get deeper access to Sora 2 Pro for more complex outputs.

If the API arrives soon, we’ll likely see developers fold Sora 2 into other creative tools.

That step could expand its reach far beyond the app itself, giving artists and startups new building blocks for content.

What risks come with Sora 2 and its social app?

The most obvious risk is misuse of Cameos. Letting users insert their likeness into AI videos is fun, but it also opens the door to impersonation.

People might find their face appearing in clips they never approved. OpenAI has added usage limits and policy safeguards, but the viral nature of social content makes it hard to control once videos spread.

There’s also the question of content quality. AI-generated clips can lean into surreal or uncanny results, which might work for memes but not for serious storytelling.

If the platform becomes saturated with low-effort remixes, users could lose interest quickly. That’s the “slop-factor” problem: a flood of shallow content that drowns out the most creative work.

Privacy and data concerns shouldn’t be overlooked. To use Cameos, people must provide a recording of themselves.

While OpenAI has privacy policies in place, handing over biometric data always raises questions about storage, future training use, and potential leaks.

For many creators, this could be the biggest barrier to adopting the app.

Finally, there’s a cultural risk. AI-generated dialogue and audio synced with visuals blur the line between authentic and synthetic voices. Without clear labeling, viewers might struggle to know what’s real.

This could fuel misinformation, especially if the model scales through the API and third-party integrations.

Could Sora 2 reshape online culture?

Sora 2 arrives at a moment when short-form video dominates online attention. Platforms like TikTok and Instagram Reels have shown how quickly trends can spread, and OpenAI seems to be leaning into that model.

By combining video generation with a built-in app, it shifts AI from being just a tool to being part of the cultural pipeline itself.

The Cameos feature, in particular, plays into identity and performance online. Users have always enjoyed filters, avatars, and virtual costumes.

Dropping yourself into an AI-generated movie scene is just the next step. If enough people adopt it, Cameos could become a new meme format that spreads beyond the app and onto other platforms.

There’s also potential for creators who want to tell stories at scale. With synchronized audio and visuals, a single person can produce short sketches or narrative clips without a crew.

That lowers the barrier for independent voices, especially younger creators who are comfortable blending personal identity with digital performance.

At the same time, the risk of novelty fatigue is real. Novelty drives early growth, but staying power requires more than just fresh gimmicks.

If OpenAI continues updating Sora with longer clips, better dialogue, and new creative modes, it might keep the cultural momentum alive. Without that, the hype could fade as fast as it arrived.