Character AI used to feel like a space where creative interaction came first.

Whether you were roleplaying with a favorite bot, calling Santa for your kids, or practicing a new language during your commute, voice calls felt like a natural part of the experience.

Now, that feature is suddenly locked behind a subscription without any warning.

The rollout wasn’t announced. No pop-up. No changelog. Just a quiet switch. Free users are now blocked from a tool many used to connect with their bots in deeper, more personal ways.

The silence around it hit harder than the actual paywall. For a company whose entire product is communication, this felt like a slap in the face.

Some users never touched the voice feature. Others used it every day. But the bigger issue isn’t about how often it was used. It’s about how it was taken away.

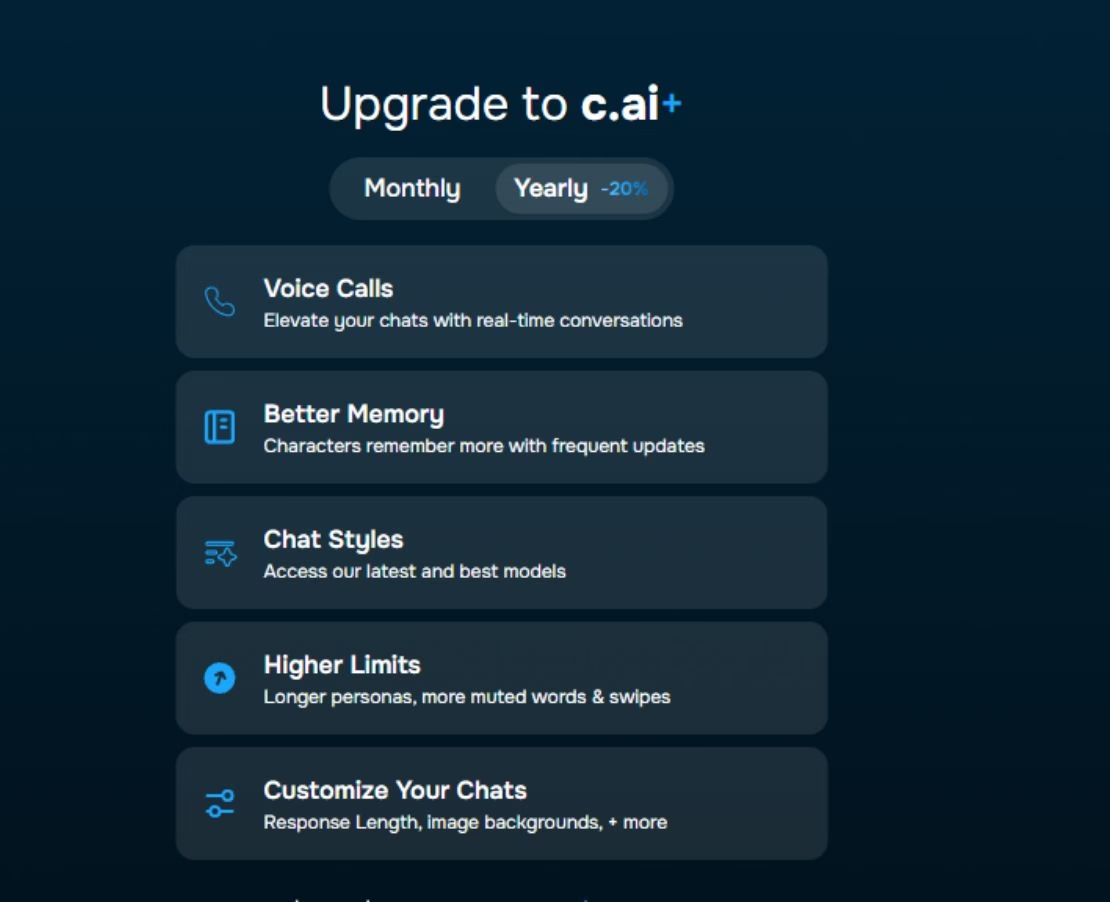

People woke up to find the option gone, with only a vague sales pitch about “elevated experience” on a new c.ai+ screen.

It wasn’t just a technical tweak. It changed the relationship between the user and the platform.

We’re now seeing frustration turn into mistrust. And the more users dig into what’s really included in that subscription, like “better memory” or “custom chat styles,” the more it feels like essential features are being turned into luxury perks.

So, how did we end up here?

People Aren’t Upset About the Paywall. They’re Upset About the Silence

This wasn’t just a pricing decision. It was a communication failure. When you take away a core feature that some users rely on for accessibility, comfort, or even daily routine, you need to say something.

But Character AI didn’t.

There was no blog post. No heads-up inside the app. Users found out by trying to make a call and getting blocked. Some even thought it was a glitch at first. Others assumed it was a bug tied to a recent update. That’s how unclear the change was.

What followed was confusion and speculation. Was it rolling out only on iOS? Was it account-specific? Were under-18 users blocked first?

The lack of clarity pushed the community into detective mode, trying to make sense of a feature that had just quietly vanished.

The frustration wasn’t just about the feature being gone. It was about being left in the dark. When companies treat their users like an afterthought, trust erodes.

This wasn’t just a missing button. It was a message, whether intentional or not, that your experience doesn’t matter if you’re not paying.

Voice Calls Weren’t Just a Gimmick. People Actually Used Them

One of the biggest surprises in all this is how many people shared real, personal ways they used voice calls. This wasn’t a novelty. It was a lifeline for some, a creative tool for others, and a routine comfort for many.

Here’s how users said they used voice calls:

- Practicing spoken English or other languages without fear of judgment
- Reenacting bot conversations with Bluetooth-enabled plushies
- Helping with social anxiety by mimicking casual phone chats
- Playing creative scenarios by connecting two bots on separate phones
- Using calls as a calming tool during panic attacks or lonely walks

One parent even described using it to call Santa with their kids during the holidays. For them, this wasn’t an extra. It was the core of their experience.

It’s easy to dismiss voice calls as a niche feature. But real stories prove otherwise. And when a feature with so many meaningful uses disappears overnight, it doesn’t feel like a business decision.

It feels like something was taken away without care.

The Whole Point of Voice Features

There’s been a lot of debate about whether voice calls fall under accessibility or just convenience. The truth is, it depends on how they were used. For some, it was about multitasking. For others, it was about being able to use the app at all.

Users with visual impairments relied on calls to avoid reading long blocks of text. Some preferred speaking because of dyslexia or cognitive conditions that make reading difficult.

Others used voice as a way to interact during panic episodes or while recovering from overstimulation.

Accessibility doesn’t mean one feature helps everyone the same way. It means different tools exist to serve different needs.

Removing voice calls without a clear alternative means some users are simply shut out of the experience.

There’s also the legal side. Some commenters pointed to the ADA and to the WCAG accessibility guidelines. Voice calls themselves aren’t legally required, but apps are broadly expected to offer equal access to their features.

Putting voice interaction behind a paywall might not be illegal, but it’s definitely a step away from inclusive design.

Character AI Is Starting to Feel Like It’s Charging for the Wrong Things

No one’s denying that AI is expensive to run. Users understand that free tools come with tradeoffs. But the frustration comes when it feels like those tradeoffs are hurting the core experience.

First it was memory. Then it was voice. Now people are wondering what’s next. Stickers? Typing indicators?

The feeling is growing that instead of enhancing the experience for paying users, Character AI is punishing those who stay free.

Users have started asking what they’re actually paying for. Is “better memory” a premium feature or just the basic functionality everyone expected?

Is personalization a bonus or just bait to pull people into a subscription?

Here’s the risk: When users feel like they’re being nudged into paying not for improvements but to restore what used to be free, they don’t upgrade. They leave.

And many are already talking about switching to other platforms entirely.

People Are Already Looking for Better Alternatives to Character AI

The shift toward locking features behind a paywall isn’t happening in a vacuum. Users are taking note, and many are already exploring alternatives.

Some named Candy AI and CrushOn AI as smoother, more responsive platforms that actually listen to feedback.

These tools aren’t perfect, but they haven’t been gutting the free experience to push subscriptions. That alone puts them ahead in the eyes of many users. People want to feel respected, not manipulated.

When users leave, it’s rarely over a single feature. It’s about the feeling that the platform is changing in ways that don’t reflect the needs of the community.

Losing voice calls is just one example, but it represents something bigger. Character AI is losing touch with what made it special.

There’s still room to rebuild trust, but it starts with transparency.

If a feature is going to be removed or changed, say so. If something is meant to be part of a premium tier, make the value clear. Don’t just take things away and hope people stay quiet.

This Isn’t Just About Voice Calls. It’s About Respect

At the heart of all this is a simple ask: communicate with users. Not just when launching a new feature, but when changing how the app works at a basic level.

That’s the minimum people expect when they give their time and, in many cases, their money to your platform.

Voice calls were a personal, creative, even therapeutic part of the Character AI experience. Taking that away with no warning sends a message that the team isn’t paying attention. And users feel it.

Whether you used the feature every day or never touched it, the way it was handled matters. It’s a sign of how future updates might be rolled out. And for many, it’s a dealbreaker.

If Character AI wants to keep its user base, it needs to stop taking silent steps backward.

The community has shown it’s willing to support great tools. But only if the people behind those tools respect the experience that brought everyone here in the first place.