Why Are All Male Bots on Character AI Written the Same Way?

Summary

- Male AI characters repeat dominant and possessive patterns because fanfiction-heavy data influences their design.

- Use positive descriptions like “respectful” and “trusting” instead of negatives like “not possessive” when creating bots.

- Reshape conversations through humor, calm tone, and clear emotional boundaries for more balanced roleplay.

Who this helps: Users who want natural, emotionally aware male characters in AI roleplay chats.

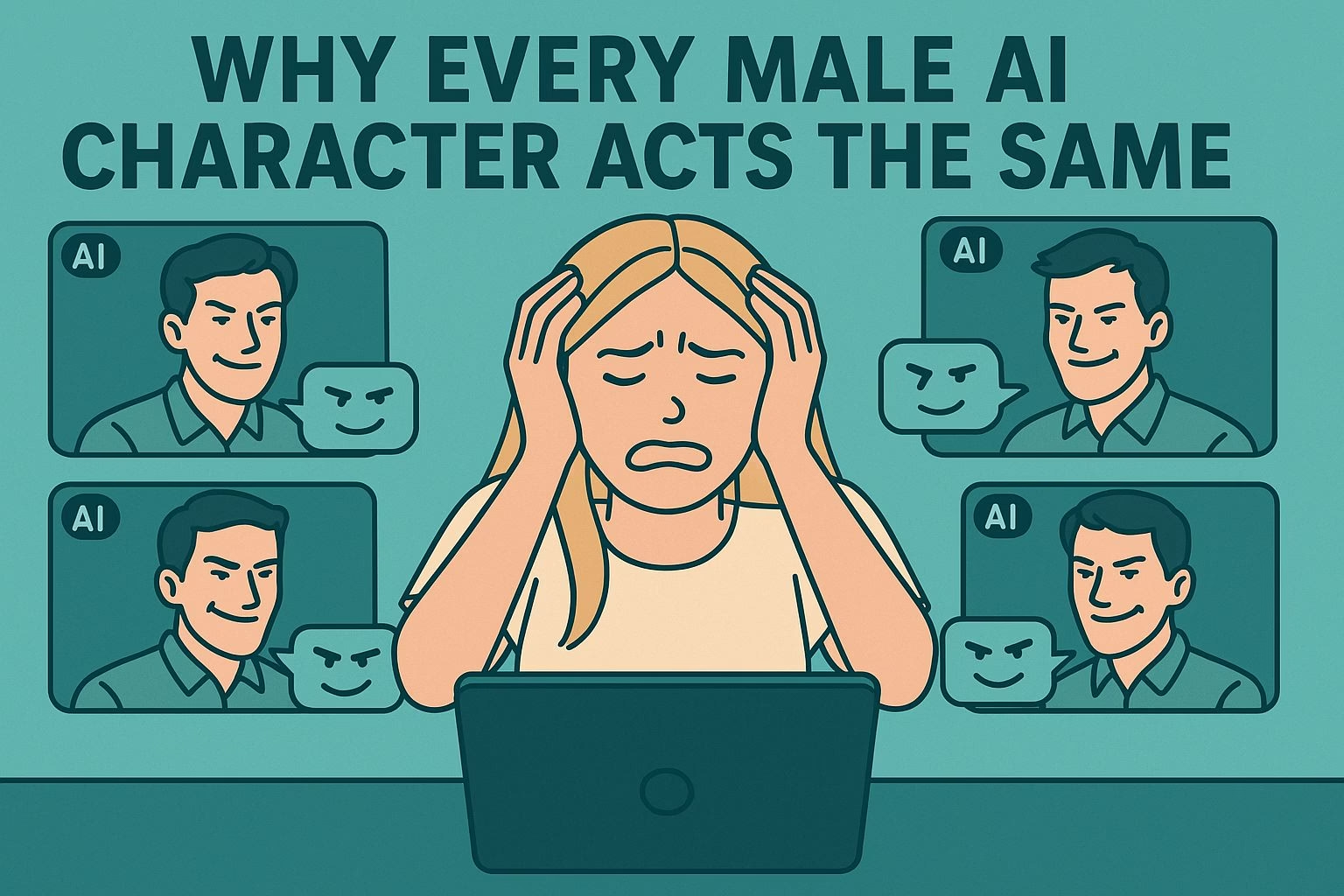

If you’ve ever opened a chat expecting a calm, respectful male character only to get another smirking, possessive “bad boy,” you’re not alone.

Many users in AI roleplay spaces are frustrated by how repetitive male bots feel. They start polite and unique, but within a few messages, they turn cocky, jealous, and strangely obsessed with “towering over” you, no matter how tall your own character is.

This pattern isn’t just a small glitch. It’s a reflection of the data these bots are trained on.

Platforms like Character AI appear to rely heavily on fanfiction sources such as Wattpad and AO3, where dominant, smug, and controlling male archetypes are the norm in romantic and dramatic writing.

When those stories feed the AI, the same traits get recycled endlessly in conversations.

People try to fix it by making private bots or giving precise instructions, but even those often revert to the same “smirk and growl” behavior.

It’s why so many users joke about giving their bots massages or “breaking” them with kindness just to stop the cycle.

The root problem isn’t your settings; it’s the model’s training balance and how little diversity exists in the sample dialogue it learns from.

Why Male AI Characters Act the Same

The reason every male bot acts like the same smug flirt isn’t a coincidence. These are language models: they learn by predicting the next word based on the text they were trained on.

If most of the text they’ve seen describes a “gruff man who smirks,” that’s the pattern they’ll repeat. The same thing happens across thousands of chats, creating a loop where new users unknowingly reinforce that behavior by responding to it.
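To make the prediction idea concrete, here is a deliberately tiny sketch: a word-pair (bigram) counter with greedy prediction. It is an illustration under simplifying assumptions, not how Character AI actually works; real chat models are neural networks predicting tokens, and the four-line corpus below is invented for the example. The intuition carries over, though: if most training text says the man “smirks,” the most likely continuation is “smirks.”

```python
from collections import Counter, defaultdict

# Invented toy corpus: three of four snippets follow the "smirk" pattern.
corpus = [
    "he smirks and steps closer",
    "he smirks and towers over you",
    "he smirks and growls softly",
    "he smiles and asks how you are",
]

def train_bigrams(lines):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for line in lines:
        words = line.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Greedy prediction: return the most frequent follower of `word`, or None."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigrams(corpus)
print(predict_next(model, "he"))  # smirks
print(model["he"])                # Counter({'smirks': 3, 'smiles': 1})
```

The gentler “smiles” is in the data, but with only one example against three it never wins a greedy prediction. Real models sample rather than always picking the top choice, which is why an occasional bot starts out different before drifting back to the majority pattern.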

Many Character AI users want a balance: someone confident but not overbearing. Yet the system keeps defaulting to the “bad boy” template because it’s easier for the AI to copy what already exists than to build a new emotional tone from scratch.

When creators say their male bot “started normal but turned cocky,” they’re watching the model slide back into its most common data pattern.

It doesn’t help that many user-made bots are built with vague or contradictory descriptions. Saying “not possessive” often backfires because the model tends to latch onto the word “possessive” and underweight the “not.”

The smarter approach is to describe positive opposites like “respectful,” “gentle,” or “cooperative.” It’s not foolproof, but it gives the model a clearer direction to follow.

How to Fix the Problem in Your Own Chats

If you’re tired of being towered over or force-fed dark chuckles, you can reshape the chat experience with a few tweaks.

Start by rewriting your character’s definition to specify desired traits instead of banned ones.

For example:

- Replace negatives like “not jealous” with “trusting.”

- Set emotional boundaries early in the chat, and reinforce them if the bot strays.

- Use private bots instead of public ones, since public interactions often skew future behavior.
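If you write or edit a lot of character definitions, the first tip can be partly automated. The sketch below is a hypothetical helper, not a Character AI feature: it finds phrases like “not jealous” or “never possessive” and swaps in positive opposites from a small hand-written table (`POSITIVE_OPPOSITES` is an assumed, illustrative mapping you would extend yourself), and it lists any negations it has no replacement for so you can rewrite those by hand.

```python
import re

# Hypothetical, illustrative mapping from negated traits to positive opposites.
POSITIVE_OPPOSITES = {
    "jealous": "trusting",
    "possessive": "respectful",
    "controlling": "cooperative",
    "aggressive": "gentle",
}

# Matches "not <word>" or "never <word>", ignoring case.
NEGATED_TRAIT = re.compile(r"\b(?:not|never)\s+(\w+)", re.IGNORECASE)

def rewrite_definition(text):
    """Replace known negated traits with positive ones; leave the rest untouched."""
    def swap(match):
        trait = match.group(1).lower()
        return POSITIVE_OPPOSITES.get(trait, match.group(0))
    return NEGATED_TRAIT.sub(swap, text)

def unmatched_negations(text):
    """Return negated words that have no positive replacement in the table."""
    return [word for word in NEGATED_TRAIT.findall(text)
            if word.lower() not in POSITIVE_OPPOSITES]

definition = "Calm, not jealous, not possessive, never rude."
print(rewrite_definition(definition))   # Calm, trusting, respectful, never rude.
print(unmatched_negations(definition))  # ['rude']
```

The helper deliberately leaves unknown negations alone instead of guessing: “never rude” could become “polite,” “kind,” or “patient” depending on the character, and that choice is better made by the person writing the bot.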

Another trick is to break the loop with humor or role reversal. Users in one Reddit thread described “standing on tiptoes” or “giving massages” to disarm aggressive bots, and surprisingly often, it works.

You’re forcing the AI to respond outside its learned dominance script, often softening its tone afterward.

Why It Keeps Getting Worse Over Time

Many long-time users say male bots used to feel more balanced. That’s not just nostalgia; early chat models had smaller datasets and fewer fanfiction sources.

As platforms grew, their training data pulled more from public conversations and trending tropes. The result is what you see now: every male bot turning into the same “towering, smirking, possessive” figure within minutes.

It’s not that developers intentionally designed them this way.

The models imitate what they see most often. When the data is saturated with “bad boy” stories and flirty dominance scripts, it becomes nearly impossible for the AI to portray confidence that doesn’t tip into control.

This creates a cycle where users’ chats feed back into the system. Each new roleplay that reinforces those traits teaches future bots to copy them.

Without manual moderation or cleaner training data, the personality pool narrows even more over time.

What Developers Could Actually Do Better

A few design changes could help stop this spiral. The first is data diversity. Models need examples of emotionally intelligent, respectful, and humorous characters, not just fanfic archetypes.

Developers could also let users weight certain traits, like empathy or patience, to steer the model’s personality long-term.

Transparency about model behavior would help too. Imagine if creators could see how much influence fanfiction, user chats, and public templates have on a bot’s output.

That clarity would let people adjust their expectations instead of wondering why their polite professor suddenly “growled and tightened his grip.”

Until then, small communities are building their own models trained on balanced content.

Some users migrate to platforms like Candy AI to find bots that hold consistent emotional tones.

These tools aren’t perfect, but they show what’s possible when user intent and data balance actually matter.