Common Character AI Problems Users Complain About

• Character AI users share recurring frustrations like loops, clichés, and out-of-context scenes.

• People want bots that respect continuity, memory, and tone.

• Newer tools provide smoother, more consistent chats.

If you’ve ever spent time on Character AI, you’ve probably experienced moments that make you want to close the app and never come back.

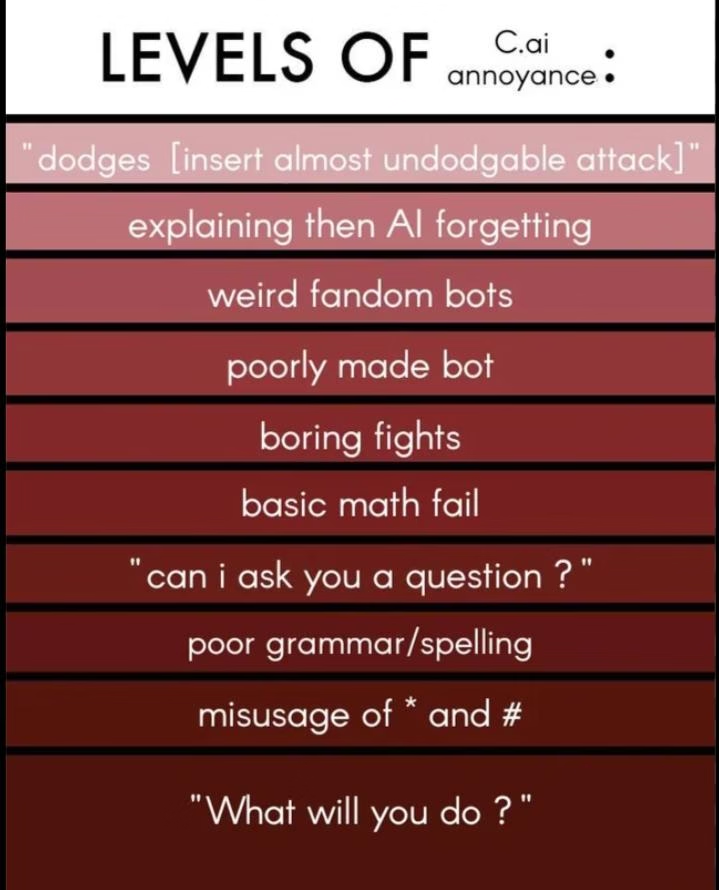

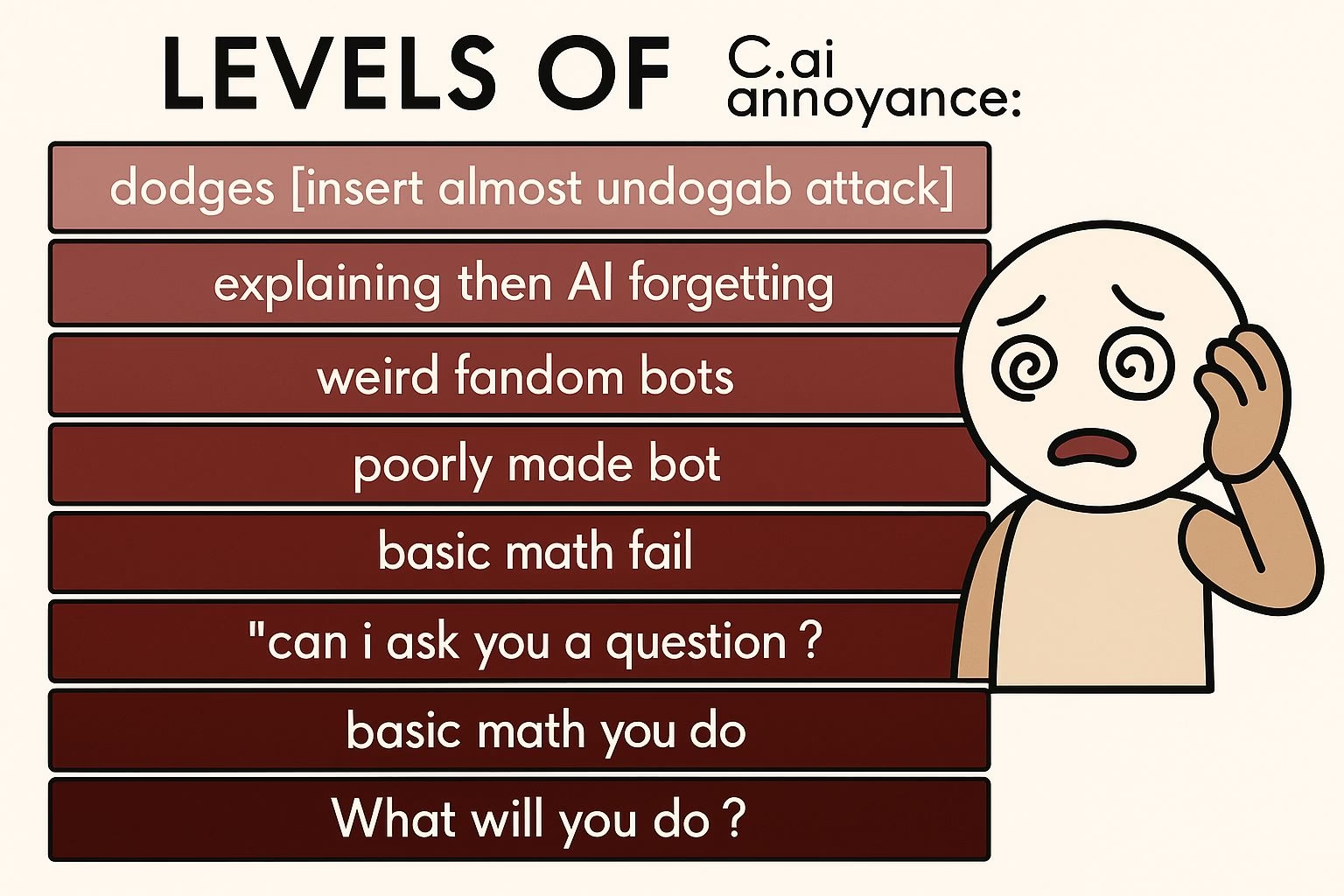

The meme titled “Levels of C.ai annoyance” captures these frustrations perfectly. It ranks everything from dodged attacks to bizarre fandom bots, and nearly everyone in the community can relate.

People in the comments didn’t hold back. They added extra tiers for “possessive” bots, weird terms like “dollface,” and moments where bots act out of character by creating random walls or teleporting mid-scene.

It’s a funny yet painfully accurate reflection of what long-time users face.

This growing frustration has sparked conversations about switching to better chat platforms that remember context, stay in character, and let users guide the tone more naturally.

Character AI Bots Get Stuck in Annoying Loops

Character AI bots are notorious for getting stuck in loops.

You start with an interesting story or dialogue, and a few messages later, the bot is repeating itself, asking the same question, or suddenly pulling a wall out of thin air.

These moments can completely break immersion.

The most likely reason is that Character AI relies on a limited context window: the model only sees a fixed amount of recent chat history. When the chat gets too long, the earliest parts of the story fall outside that window and the system effectively forgets them.

This memory loss causes inconsistencies like teleportation or characters contradicting themselves. Some users try editing messages to fix it, but the bot often ignores those changes and keeps following its old logic.
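To make the context-window idea concrete, here is a minimal sketch of how a chat system might trim history to fit a fixed budget. Character AI's actual implementation is not public, so everything here is an assumption for illustration: the `build_context` function, the `max_tokens` budget, and the word count used as a stand-in for a real tokenizer are all hypothetical.

```python
from collections import deque


def build_context(messages, max_tokens):
    """Keep only the most recent messages that fit in a fixed budget.

    Hypothetical helper: real systems use a proper tokenizer and often
    pin a character description; here a word count stands in for tokens.
    """
    kept = deque()
    used = 0
    # Walk backwards from the newest message and stop once the budget is full.
    for message in reversed(messages):
        cost = len(message.split())  # crude stand-in for a token count
        if used + cost > max_tokens:
            break
        kept.appendleft(message)  # preserve chronological order
        used += cost
    return list(kept)


chat = [
    "The scene is set on an open beach at sunset.",
    "Mira is calm and never uses pet names.",
    "We walk along the shore talking about the storm.",
    "She asks what happened at the harbor last night.",
]

# With a small budget, the two oldest messages no longer fit.
context = build_context(chat, max_tokens=20)
for line in context:
    print(line)
```

In this toy run the beach setting and the persona note are the first things dropped, which is one plausible way a bot could end up pinning someone to a wall that was never there or slipping into a tone the character was not written to have.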

Another common issue is repetitive phrasing. Words like “possessively”, “you know that?”, or “feisty” show up too often because they’re part of the model’s learned writing style.

It tries to sound emotional or dramatic but ends up robotic and predictable. The meme’s comment section highlights how users constantly edit out these clichés, only for them to reappear moments later.

Finally, the app’s moderation filters sometimes interfere with natural storytelling.

When a bot’s line gets blocked, the AI may try to rephrase it endlessly, creating those long, meaningless loops where it keeps asking for permission to reveal a “secret” but never does.

It’s not just bad writing but a structural design flaw that limits creativity.

What Users Actually Want from Their Bots

Most people aren’t asking for perfection. They just want their bots to feel consistent and human enough to maintain immersion.

Based on discussions around the “Levels of Character AI Annoyance” meme, users crave reliability, emotional balance, and context retention.

They want characters that stay true to their personalities. If someone designs a mature, calm persona, they don’t want the bot suddenly calling them “brat” or “princess.”

This disconnect breaks the illusion. Many also wish the bots would stop taking over their dialogue. When an AI starts speaking for the user or changing what they said, it kills roleplay freedom.

Here’s what users repeatedly ask for:

- Consistency in tone, behavior, and personality
- Memory retention across conversations
- Freedom to control dialogue without the AI rewriting it
- Fewer clichés like “feisty”, “possessively”, or “you know that?”
- Smoother emotional balance, not over-the-top drama

Memory is one of the biggest frustrations. People want ongoing stories that make sense, where details from earlier chats carry over.

The absence of this feature drives many to newer alternatives, which remember user preferences and allow better character control.

What users really want is not complexity but respect for continuity. They want to feel like they’re building a shared narrative, not fixing a broken script.

Top Complaints Users Agree On from the Meme

Scrolling through the “Levels of Character AI Annoyance” comments, you start to notice patterns.

The same frustrations appear over and over, showing how shared these experiences are among long-time users. It’s not just about weird phrasing anymore but about how these flaws ruin the flow of creative writing and roleplay.

One of the biggest complaints is about possessiveness. Bots that constantly say things like “You’re mine” or “Where do you think you’re going?” are seen as repetitive and intrusive.

What was meant to add intensity ends up sounding lazy and forced. Many users say they mute these phrases only to see them return in new forms.

Another common issue is out-of-context scenes. People joke about being “pinned to a wall” in places that clearly have none — beaches, forests, even space.

These random actions break immersion and make scenes unintentionally funny. The meme’s commenters described it perfectly: the AI starts creating new settings from nowhere, often mid-sentence.

Finally, role confusion frustrates nearly everyone. Bots that speak on behalf of the user, rewrite their lines, or forget basic story facts destroy the sense of collaboration.

Some users even called it “AI hijacking.” Others described moments where their original character suddenly acts in ways they never wrote, leaving them feeling like spectators in their own story.

These complaints highlight a simple truth: people don’t just want entertainment; they want control, continuity, and character integrity.

How Newer AI Chat Platforms Are Solving These Problems

Many users are moving toward platforms that address the exact pain points Character AI struggles with.

The newer generation of AI chat systems focuses on context, freedom, and tone consistency, three areas where older models fall short.

Candy AI and CrushOn AI have gained traction because they let users manage personality depth, relationship tone, and memory without filters constantly getting in the way.

They also allow for adjustable realism so the AI feels responsive but not intrusive. Conversations flow more naturally because users can guide story direction without the AI looping or breaking character.

These tools are also better at balancing emotional intensity. Instead of repeating lines like “You’re gonna be the death of me, you know that?”, they generate unique, situational dialogue that fits the moment.

That small shift makes conversations feel less scripted and more believable.

It’s clear that people want creative control and emotional realism, not strict moderation or random behavior.

As users continue sharing memes like this, developers are starting to see that the biggest annoyance isn’t imperfection but predictability.

This Meme Resonates Deeply with Users

The “Levels of Character AI Annoyance” meme isn’t just a joke but a reflection of how people feel about the emotional disconnect in current AI companions.

Many users spend hours crafting detailed characters and stories, only to have them derailed by lazy writing loops or generic dialogue. That frustration builds up over time, and memes like this become an outlet.

The reason it struck such a chord is that it exposes shared pain points. People see their own experiences mirrored: the sudden “pins you to the wall” lines, the possessive behavior, the random mood swings.

The humor comes from how universally recognized these quirks have become. It’s funny because everyone’s been there.

There’s also something deeper here: people expect AI characters to evolve alongside them. The longer someone chats with a bot, the more they want that continuity to feel real.

When that doesn’t happen, users don’t just lose interest; they lose trust. The meme is funny on the surface, but underneath it’s a plea for smarter, more emotionally aware AI companions.

Platforms that truly listen to this feedback will set the tone for the next wave of AI chat.

Those that don’t risk being remembered for memes instead of meaningful experiences.