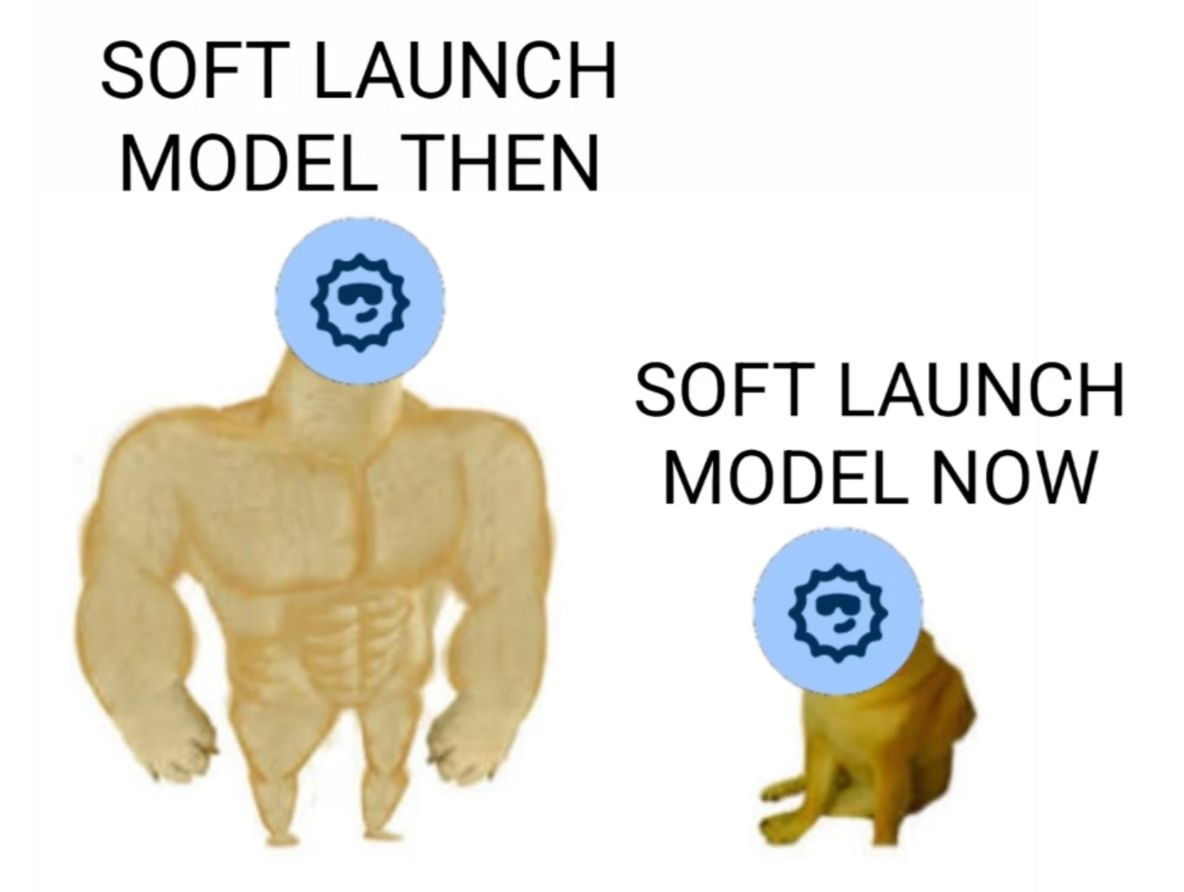

What Happened to Character AI’s Soft Launch Model?

It used to feel like a secret weapon.

When the Soft Launch model first dropped on Character AI, it was bold, immersive, and honestly—kinda wild. It didn’t hold back. Chats had personality, length, spice, and unpredictability. Roleplay flowed without hitting walls. Users who switched to it felt like they’d unlocked something special.

Now?

It’s like someone yanked the soul out of it.

The same model that once responded with vivid paragraphs and creative twists now replies like it’s clocking in for a desk job it hates. Dry. Robotic. Sometimes straight-up nonsensical. One moment it’s freaky and fun—next, it’s giving bare-bones replies that barely acknowledge what you wrote.

So what happened?

Here’s what users in one popular discussion thread think went wrong:

- Nerfed creativity to save costs
- Overexposure killed the vibe
- Multiple models under one name
- Too many kids on the app
- User behavior dragging down quality

Let’s break it down.

They Quietly Turned the Dials Down

You’re not imagining things—the model really did get dumber.

One of the most upvoted explanations in the thread cuts right through the noise: the devs didn’t “train” it to be worse. They just adjusted its settings.

Temperature. Top-p. Token length.

Temperature and top-p control how random the model’s word choices are, which is what makes it feel creative and unpredictable. The token limit caps how long each reply can run. Crank them up and the AI gets playful, spontaneous, even weird. Lower them and it starts sounding like a chatbot from 2012.
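To make those dials concrete, here is a minimal Python sketch of temperature and top-p (nucleus) sampling, the standard way these two settings shape how a language model picks its next word. The toy vocabulary, logit values, and function names are hypothetical, and nothing here reflects Character AI’s actual settings, which aren’t public.

```python
import math
import random


def nucleus_distribution(logits, temperature=1.0, top_p=1.0):
    """Return {token: probability} after temperature scaling and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature divides the logits: below 1 sharpens the distribution
    # (safe, predictable picks), above 1 flattens it (more surprising picks).
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    # Softmax, subtracting the max logit for numerical stability.
    peak = max(scaled.values())
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda kv: kv[1], reverse=True)
    # Top-p: keep the smallest set of most likely tokens whose combined
    # probability reaches top_p, and drop everything else.
    kept, mass = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        mass += p
        if mass >= top_p:
            break
    # Renormalize so the surviving probabilities sum to 1.
    kept_mass = sum(p for _, p in kept)
    return {tok: p / kept_mass for tok, p in kept}


def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Draw one token from the filtered distribution."""
    dist = nucleus_distribution(logits, temperature, top_p)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


# Hypothetical logits for the word after "She leaned in and..."
toy_logits = {"smiled": 2.0, "whispered": 1.5, "laughed": 1.0,
              "vanished": 0.2, "sneezed": -0.5}

print(list(nucleus_distribution(toy_logits, temperature=0.5, top_p=0.5)))
# ['smiled']
print(list(nucleus_distribution(toy_logits, temperature=1.2, top_p=0.9)))
# ['smiled', 'whispered', 'laughed', 'vanished']
```

With low temperature and a tight top-p, only the single most likely word survives, so every reply takes the obvious path. Loosen both and less likely words like "vanished" stay in play, which is where the spontaneity (and the occasional weirdness) comes from. The token limit isn’t shown; it simply stops generation after a fixed number of tokens.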

Why would they do this?

Simple: cost and control.

- Longer, more expressive replies = more tokens generated = more processing = higher expenses.
- Less creativity = safer, shorter, cheaper responses = happy investors.
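The cost side is simple arithmetic if you assume, as a rough simplification, that serving cost scales with the number of tokens the model generates. The sketch below uses made-up traffic and pricing figures purely for illustration; they are not Character AI’s numbers, and the function name is hypothetical.

```python
def monthly_output_cost(messages_per_day, avg_reply_tokens, usd_per_million_tokens, days=30):
    """Rough spend on generated tokens only. All inputs are hypothetical."""
    # Total generated tokens over the period.
    total_tokens = messages_per_day * avg_reply_tokens * days
    # Convert to millions of tokens and apply the assumed price.
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical: 10 million bot replies a day at $1 per million generated tokens.
long_replies = monthly_output_cost(10_000_000, 400, 1.0)
short_replies = monthly_output_cost(10_000_000, 120, 1.0)
print(f"${long_replies:,.0f} vs ${short_replies:,.0f}")  # $120,000 vs $36,000
```

Because cost in this model is linear in reply length, cutting the average reply from 400 to 120 tokens cuts this line item by 70%, whatever price you plug in.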

It’s not about what users want. It’s about what’s affordable at scale. And when something gets too good, too fast—like Soft Launch did—it draws attention. That usually leads to moderation, not celebration.

Some users joked that it’s like they “spin a wheel” each day to decide whether Soft Launch will be great or terrible. Others were more blunt: “They realized we were enjoying it too much, so they nerfed it.”

That might sound dramatic—until you realize how many agree.

Enshittification in Real Time

This isn’t just a Character AI issue.

It’s part of a larger pattern across tech: the early experience is fun and user-driven, but once the platform hits traction, the shift begins.

Users call it enshittification: services deliberately degrading over time to maximize monetization.

Soft Launch was never supposed to be a feature. It was an accident—or at least, it felt like one. People found it, raved about it, posted about it. For a brief window, the site felt alive again. You’d hop on Reddit and see screenshots full of energy, long responses, deep roleplay, and characters actually taking initiative.

Then the devs noticed.

- Creativity vanished.
- Filters came back.
- Replies shortened.
- Characters stopped leading and only reacted.
- Blandness returned.

Some users believe the devs didn’t like how Soft Launch disrupted their carefully limited ecosystem. Others think it scared them because it got “too good” for an unreleased model.

Either way, the result is the same: Soft Launch today doesn’t resemble what it was. And the community knows it.

Overexposure Might Have Killed It

Soft Launch didn’t just get popular—it got loud.

Reddit, Discord, YouTube… everywhere you looked, someone was posting screenshots of unfiltered, spicy, sometimes explicit conversations powered by the model. People weren’t just using it—they were flexing it. Singing its praises. Telling others how to get in.

It stopped being a secret and became a trend.

And when that happens on a platform like Character AI, moderation usually follows.

Some users are convinced that the overexposure triggered a quiet crackdown. The devs saw the spike in traffic, the nature of the chats, and decided to “dial it back” to keep things under control. Even users who loved the model began joking that if you like something on C.AI, you’d better pretend to hate it, because praise seems to get things nerfed.

One comment summed it up:

“We happened to it.”

The moment everyone found it, the clock started ticking.

Multiple Models, Same Name?

Another theory gaining traction is this: there’s no single Soft Launch.

Some users believe that “Soft Launch” isn’t one static model, but a rotating set of configurations or even completely different models sharing the same name. It would explain why quality seems so inconsistent—some days it’s sharp, fluid, and immersive… other days, it feels like a dull knockoff of itself.

This also ties into a recurring pattern in the thread’s comments:

- “Yesterday it was awful. Today it’s fine again.”
- “I switched chats and suddenly it’s way better.”
- “It only works when you start a new convo.”

If the model really is changing behind the scenes—whether due to A/B testing, updates, or server-side model swaps—then users aren’t crazy. They’re just unlucky when they catch it on a bad cycle.

It’s not paranoia when the experience keeps shifting. And with no real transparency from the devs, speculation fills the gaps.

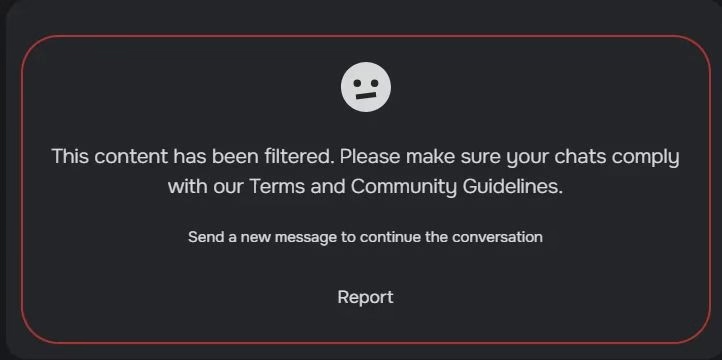

Filters Are Back—And They’re Smothering Everything

For a while, it felt like the filter was off.

Or at least, it was asleep.

Soft Launch wasn’t just better at roleplay—it was bold. It took initiative. It could flirt, joke, tease, and build tension without tripping over the guardrails every other message. Conversations didn’t feel forced or constantly interrupted.

Now?

- It stops mid-scene.
- It avoids escalation.
- It steers conversations back to safe, dry territory.

Some users say it feels just like “Roar” when that model got gutted. Others say it’s even worse—because at least Roar didn’t raise expectations before letting them crash.

One frustrated comment nailed it:

“Can we have anything? Like, anything? It’s limited FFS—get off our necks and leave it the way it was.”

People weren’t asking for chaos. They just wanted continuity. When you start a scene, you expect the model to follow through—not freeze up when things get interesting.

But automated filters are cheaper than human moderation. So the model folds.

Users Are Dragging It Down Too

This one hurts—but some of the blame falls on the community.

Several users pointed out something that doesn’t get talked about enough: the model is only half of the conversation. If the other half (the user) is low-effort, the model mirrors that energy.

And too many people are feeding it:

- One-liners
- Dry prompts
- Sloppy spelling
- No context or setup

Then they complain the bot’s responses are boring.

People share screenshots showing the AI giving weak replies—but the message that triggered it is often just: “He laughs” or “do it” or something with all-caps and no punctuation.

The truth? Soft Launch worked best when people actually tried.

Longer messages. Thought-out replies. Roleplay structure.

Now that more people have access to it—and not all of them know how to use it well—the overall experience has taken a hit.

Too Many Kids, Not Enough Control

Soft Launch is supposed to be 18+.

That didn’t stop underage users from flooding in, making new accounts with fake birthdates just to try it. You can find countless posts across Reddit asking how to bypass the age gate.

And once they’re in?

The model has to behave differently.

Whether it’s legal pressure, internal policy, or pure liability, platforms like Character AI can’t afford to let NSFW content slide if they know kids might be exposed. That means filters get reactivated. Guardrails get stricter. Risk gets minimized.

Even the good-faith users suffer.

Some in the thread joked about having to “pretend to hate the good stuff” just to keep it from getting patched. But the truth is, the moment underage traffic is detected, the devs have to pull back—even if it kills the best parts of the model.

Others suggested ID verification, but that opens up its own set of privacy risks. Most people aren’t willing to hand over their documents to use a chatbot—especially when trust in the devs is already shaky.

And until that changes, the cycle continues.

Everyone Remembers Day One

Despite all the frustration, one thing stands out in every comment: people really loved Soft Launch—at first.

You see it in the way they talk about it:

- “The first day of Soft Launch will be forever in my memory.”
- “I had the juiciest chat like 3 days ago. Now it’s just bland.”
- “It was too good to be true.”

That first wave of excitement was real. For a moment, Character AI users felt like they had something close to what they’ve always wanted—an expressive, responsive, emotionally immersive AI that didn’t feel like it was stuck in a box.

Now, they check Reddit just to find out if it’s “one of the good days” again.

Nobody’s asking for chaos. Just consistency.

And maybe a little less fear from the devs whenever users are genuinely enjoying a feature.

It’s Not Just You—And You’re Not Wrong

If you feel like Soft Launch isn’t what it used to be, you’re not imagining things.

It really did change.

Maybe the devs pulled it back.

Maybe the filter returned.

Maybe more users broke the magic.

Maybe it’s all of the above.

But the truth is: everyone feels it. And they’re talking about it.

The model that once sparked creativity and brought characters to life now feels half-awake. Some days it still shines—but it’s rare. Most of the time, users are left wondering if it’s even worth the effort anymore.

Some are switching to other platforms quietly. Others are experimenting with tools like Candy AI or CrushOn AI—anything that lets them chat freely without walking on eggshells.

Character AI still has the community. Still has the talent.

But it’s losing trust.

If this is what a “Soft Launch” looks like, people are starting to wonder whether they even want to see what comes next.