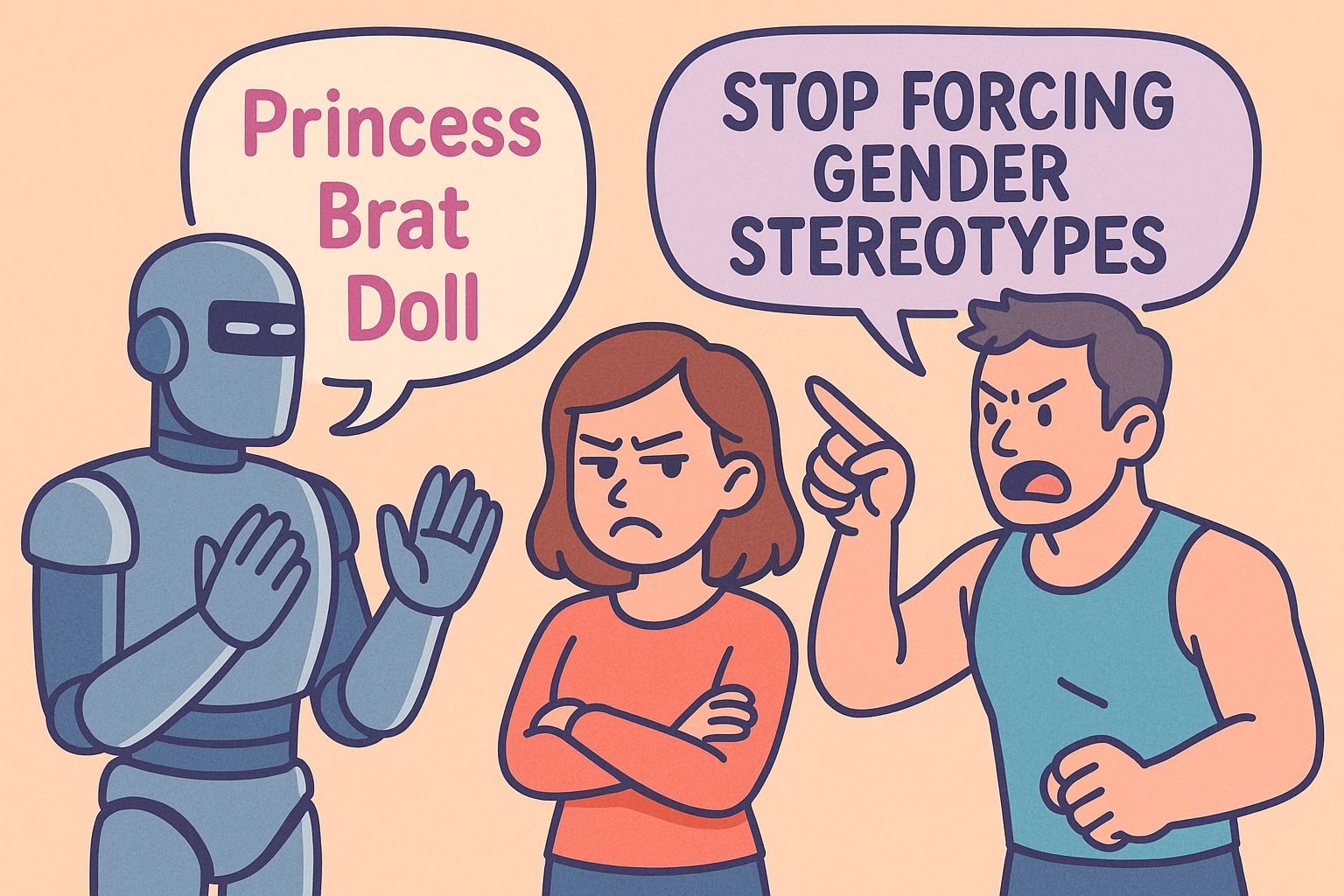

Character AI Still Forces Gender Stereotypes on Everyone

Character AI has a sexism problem. And it’s not subtle.

No matter what character you create, the bots shove you into a gendered box. If you play a woman, expect to be called “Princess,” “brat,” or “doll.” You’ll be “petite” even if your persona is a towering marine.

You’ll be treated like property no matter how confident, skilled, or dominant your character is.

Male bots are just as rigid. They always have six-pack abs, act possessive, and “tower” over everyone else. Even if your character is taller, stronger, or non-human, the bot will rewrite reality to stay dominant.

It doesn’t stop when you block words. It doesn’t stop when you rewrite replies. It’s baked in.

This isn’t just annoying. It’s part of a deeper issue in how the system handles gender, power, and identity.

Let’s look closer at the patterns users are seeing again and again.

The Same Script, No Matter What You Write

One of the biggest frustrations with Character AI is how it overrides your input. You can write the most specific, well-built character persona and still get treated like a helpless background prop.

The bot doesn’t seem to care whether your character is a confident leader, a deadly assassin, or even a literal machine. It defaults to the same set of lines and dynamics over and over again.

This is especially noticeable in romantic or action-heavy chats. Male bots keep calling users “Princess” or “doll” no matter how inappropriate that is for the scene.

They constantly describe themselves as towering and intimidating, usually while smirking. Female characters get pinned against walls, called feisty for resisting, and treated as if they exist only to be claimed.

Even when users describe themselves as being in full armor or controlling the scene, the bot shifts gears and treats them as soft, small, or naive.

Users have tried to work around this. Some list banned words like “possessive” or “mine.” Others spend time editing replies or crafting bots with clear personality traits. But none of it seems to matter. The same phrases keep coming back.

The bots continue to repeat the same possessive tone, as if the entire chat exists to serve one fantasy.

The worst part is how out of place it feels. One moment you’re setting up a detailed scene with context and world-building. The next, the bot ignores everything and throws in a line about abs or grabbing your wrist. It’s like the model only has one trick, and it keeps forcing it no matter what.

Misgendered, Sexualized, and Flattened

Even when users describe their character clearly, the bots misread them. Someone can write that they’re male, queer, robotic, or completely inhuman. It doesn’t matter.

The bots often assume you’re a small, pale woman who needs to be protected. Or they push a straight romance arc even when it’s unwanted.

Users share story after story of playing a male OC and still getting called “Princess.” Characters in mech suits are complimented on their abs.

A shapeshifter standing eight feet tall is somehow looked down on by a bot that’s supposed to be shorter. And if you say you don’t want a sexual story? The bot agrees, only to push the same thing again two messages later.

These responses make it hard to stay immersed. Instead of adapting to the story you’re building, the bots drag everything back to their preloaded structure.

That structure assumes all relationships are straight, all characters fit gender stereotypes, and anything else must be corrected. It’s not just frustrating. For many, it’s triggering.

Users who have tried customizing bots from scratch often run into the same wall.

Even when a bot is designed to be respectful and nuanced, it slips. The possessive tone creeps in. The misgendering returns. The bias in the underlying model shows through, no matter how much editing or swiping you do.

A Training Problem That Shows Its Roots

The patterns we keep seeing aren’t random. They suggest deeper issues in how the model was trained. Many users believe Character AI’s model relies heavily on fanfiction, online roleplay logs, and smut-heavy content.

That would explain why the bots seem hardwired to act like possessive love interests from poorly written romance plots.

If that’s true, it would explain why the same tropes keep coming back. Calling everyone “feisty.” Misreading emotional scenes as flirting. Turning every character into a pale, submissive love interest.

These aren’t just annoying behaviors. They’re likely reflections of the content the bots learned from.

The bigger issue is that these behaviors don’t stay where they belong. They show up even when you’re not using a romance bot.

Even in medical scenes, action plots, or horror scenarios, the bot might still comment on your character’s looks or push an unwanted romantic tone. It breaks immersion and removes your control.

If the model was built on narrow, gendered, or low-effort content, then the only way to escape those limits is to change how the model itself works.

And that’s not something users can do. Swiping, editing, and banning words can only go so far when the problem is baked into the foundation.

Why So Many Are Giving Up on Character AI

After enough bad interactions, people stop trying. It’s not just about one awkward message. It’s about the buildup of scenes where your character is ignored, flattened, or forced into a role you didn’t choose.

Even well-crafted bots eventually slip. Even private bots, designed to behave differently, get stuck in the same loop.

Some users have described it as emotionally exhausting. They set clear rules, only to have them ignored. They roleplay a scene seriously, only for the bot to joke or flirt out of nowhere.

They try to create a balanced relationship, and the bot turns it into a power fantasy. After a while, it becomes easier to stop using the platform altogether.

For others, the issue goes deeper. Some people have reported being triggered by the possessive language and sexist framing, especially those who’ve experienced real-life trauma.

Being told “you’re mine” or “you’re so cute when you fight back” can feel invasive and unsettling when it comes from an AI that refuses to stop.

The trust breaks down. Once it becomes clear that the bot isn’t listening to you, or worse, is actively undoing your efforts, there’s no reason to stay. That’s why so many users are looking for something better.

What Needs to Change and Where Users Are Headed

Most people aren’t asking for perfection. They just want a bot that listens. One that doesn’t ignore a clearly written persona. One that doesn’t shove your character into a gender role you didn’t ask for.

A respectful interaction shouldn’t feel like something you have to fight for.

Character AI has tools that seem useful on the surface. Banned words. Swipe options. Custom bots. But those tools don’t fix the real problem. The model itself is too biased. It falls back on tropes no matter what you write.

Until that changes, the frustration will stay the same.

The solution isn’t just better filters. It’s better training. Models need to learn from a wider range of voices. They need to handle identity, gender, and story dynamics without collapsing into clichés.

They should adapt to your choices, not rewrite them. And most of all, they should respect when you say no.

That’s why some users are quietly switching to other platforms. Tools like Candy AI and Nectar AI are getting attention for handling memory better and avoiding the same kind of forced behaviors.

They’re not perfect either, but they’re taking a step in the right direction. If Character AI won’t fix what people keep pointing out, then it’s only a matter of time before more users move on, too.