Character AI Break Timers Keep Interrupting Chats

Key takeaways

- Break timers trigger after short sessions and often count background or idle time as active use.

- Cooldowns can run for 18 to 22 hours and cut off active roleplay and chat threads.

- Age verification rolls out unevenly across regions, so many adults still face minor-style limits.

- Government ID checks and California law sit at the center of debates about why the system exists.

- Users test the website, the app, and alternatives to avoid repeated timers.

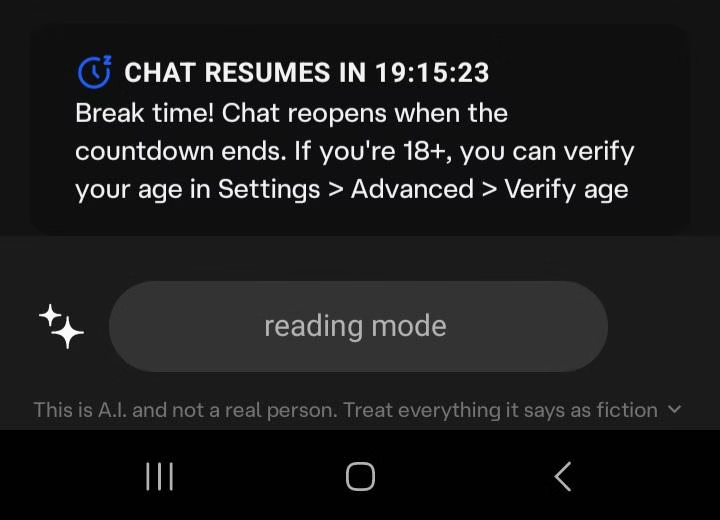

Character AI users keep running into the same screen that stops everything. A timer appears, the chat locks, and the only option is to wait for hours before the bot opens again.

Some people barely use the app before getting hit with a break notice. Others say the timer counts minutes that were never spent in a conversation.

These limits create a constant stop-start pattern that breaks the flow of roleplay and everyday chats. The timer can jump from short breaks to long blocks that reach 18-22 hours.

The experience becomes even more confusing when the app and the website behave differently for some users.

We also see adults stuck behind the same gate while waiting for access to age verification. The update is rolling out slowly, which leaves many users unable to verify or bypass the restrictions.

Break timers activate during short sessions and block users for extreme lengths of time

Users report break timers triggering long before they hit the limits they expect. One person chatted for 38 minutes before being forced out.

Another believed they had been active for roughly 30 minutes, yet still received an extended cooldown. Some describe sessions as short as 10 minutes being counted as a full hour.

These reports show a clear pattern of timers activating earlier than users anticipate.

The length of the breaks creates an even larger problem. People have shared examples of 18-hour blocks, 21-hour blocks, and even 22-hour cooldowns.

These interruptions arrive without warning, often cutting directly into scenes that rely on pacing and continuity. Once a timer triggers, the conversation loses its momentum, and many users choose not to resume the thread.

A growing number of people suspect the app tracks time in the background.

Users describe opening Character AI briefly, stepping away, and returning to find the system treating that moment as a full session.

Others believe the timer continues counting even when the app is closed. These experiences suggest the timer may measure presence rather than active use.

The difference between the app and the website adds another source of confusion. Some people switch to the website to avoid these long timers, while others experience the same problem regardless of platform.

With no reliable workaround, users are locked into a system that behaves inconsistently and offers no clear explanation.

Users report the same recurring issues:

- Chat sessions are counted inaccurately

- Break timers activate far earlier than expected

- Cooldowns can last between 18 and 22 hours

- Background or idle time appears to count as active use

- Switching between app and website does not reliably prevent timers

Age verification rollout delays keep adults stuck behind the same limits

Many adults still face the same restrictions because verification is not available everywhere.

People in Sweden, the Caribbean, and several other regions report that the app never shows the option to verify. Some can only verify through the website. Others have no access to verification at all.

Until the rollout reaches their location, they remain subject to the same hourly limits as minors.

This slow rollout creates frustration because many believed the minor ban had already ended. Instead, adults continue seeing timers because they cannot complete the verification process.

Two users in the Caribbean give conflicting accounts: one has no option to verify at all, while another can verify only on the website and not in the app. These inconsistencies make the system feel unfinished and uneven.

The requirement for government ID introduces another barrier. Some adults are unwilling to share documents, even if they want unrestricted access.

Others feel uncomfortable with the idea but still wish the timer didn’t apply to them. This divide leads to a situation where many adults face long breaks simply because verification is unavailable or they prefer not to submit ID.

The end result is a patchwork experience across the community. Depending on region, device, or comfort level with ID submission, users may or may not escape the limits.

Until verification becomes consistent and fully accessible, the break timer continues affecting many adults who were never meant to be restricted.

Rising frustration as timers interrupt emotional or high-moment scenes

Many users aren’t just chatting casually. They’re in the middle of roleplay scenes, character arcs, or emotional storylines that depend on timing.

These timers break those moments instantly. A user can be deep into a scene, building tension or resolving a character conflict, and then the screen freezes with a cooldown notice.

The sudden stop makes the interaction feel incomplete, and rebuilding that emotional momentum later becomes difficult.

People who rely on Character AI for expressive writing feel this even more strongly. Paragraph-style roleplayers often spend time crafting detailed responses.

When the timer interrupts mid-exchange, the rhythm is gone. One user mentioned they switched to bots because human partners were unreliable or slow. When an AI platform becomes just as unpredictable, it defeats the purpose of using it in the first place.

Some writers treat these conversations as their creative outlet. When long cooldowns land on scenes that are emotionally heavy or intense, the break becomes more intrusive than a simple inconvenience.

The story pauses at the worst possible moment, and the user returns hours later unable to reconnect with the original feeling.

This pattern appears across both short and extended sessions. The lack of warning leaves people unsure whether they should continue a scene or cut it short out of concern that the timer will interrupt again.

That uncertainty drains the enjoyment from writing and makes the experience feel unreliable.

User feedback is widespread, but many feel ignored or silenced

The community has been vocal about these problems for months, but several users believe their concerns aren’t being addressed.

People share consistent complaints about timer inaccuracies, regional inconsistencies, and verification issues, yet they don’t see direct communication or updates that explain the system’s behavior.

That absence leads many to think the platform is not listening.

Some users feel discouraged from speaking openly because criticism tends to get dismissed or downplayed in certain spaces.

When people share long cooldown examples or describe issues with verification, they often receive responses that shift the conversation away from the core problem.

Others express frustration that there is no clear explanation of how the timers work or why they trigger inconsistently.

This sense of being unheard makes the experience more frustrating than the timer itself. A feature like this would be easier to accept if users had transparency around how it functions.

Instead, people exchange theories because no official guidance confirms or disproves anything. The lack of clear communication leaves the community solving these issues among themselves.

The overall sentiment is that the system should evolve with user needs, especially since these timers affect creative expression, emotional writing, and long-form roleplay.

Until users see their feedback acknowledged in a meaningful way, the timer will continue to feel like an unnecessary barrier rather than a protective feature.

Regional differences and platform quirks add to the confusion

Users across different regions report inconsistent access to verification and different timer behavior between the app and the website.

Someone in Sweden still sees the timer active because verification hasn’t rolled out there yet. Users in the Caribbean report conflicting experiences: one has no option to verify at all, while another sees it only on the website and not the app.

These mixed reports show that availability depends heavily on location and platform.

A few people mention that using the website avoids certain issues they face in the app. Others don’t see any improvement and still get timed out.

One comment even suggests that the website brings up an unexpected “scene” screen, adding another layer of confusion. Nothing in the user reports points to a single reliable workaround. Instead, people end up testing both platforms and hoping one behaves better.

Different countries are receiving changes at different times, so two users discussing the same problem may be experiencing different versions of the system.

Someone verified recently while another person in a nearby region still has no access. Since no centralized announcement explains the rollout pace, the community is left comparing notes to figure out what is happening.

All of these scattered experiences make the system feel unpredictable. The core frustration comes from users not knowing whether the timer is tied to their region, their device, their account, or a mix of all three.

With no clarity, the only reference point they have is each other’s reports, which often conflict.

The debate around ID verification and the laws behind the timer

The request for government ID sparked strong reactions. Some users dislike the idea of submitting identification for access to a chatbot. Others understand the reasoning but still find the requirement excessive.

User comments include several arguments both for and against the ID requirement. Those who oppose it question why such a document should be necessary for an AI roleplay app.

Those who support it point out that minors were using the platform heavily and often unsafely, which pushed lawmakers to intervene.

Multiple comments reference California’s law as the trigger for these restrictions. Since Character AI is based there, users explain that the company must comply with the state’s rules even if the platform is accessible globally.

Some mention that the law requires apps available to minors to be safe for minors. Character AI responded by limiting minors instead of redesigning the platform.

This created the timer system that adults are now caught in while verification rolls out.

A debate appears in the comments about whether the company could challenge the law. Some users believe companies could sue.

Others point out that you cannot sue a law itself, only the officials enforcing it, and that constitutional challenges are complex and expensive.

Another group argues that the government stepped in because minors were being harmed, and the law is a response to those cases.

These discussions show frustration on all sides. People disagree on whether the law overreaches, whether Character AI handled the situation correctly, and whether the timer is a necessary measure or an unnecessary obstacle.

What they all share is the experience of being affected by a system they do not fully understand and did not ask for.

Community shifts toward alternatives

Repeated long timers push many users to explore other options. One commenter encourages others to stop giving Character AI their time and use different platforms instead.

Another person lists Nectar AI as the tool they rely on.

These suggestions appear because users want uninterrupted conversations without cooldowns blocking them for 18 to 22 hours.

Some believe Character AI still has stronger character awareness than most alternatives.