Character AI short responses and how to push the models toward longer messages

Summary

Core issue: Short responses break pacing and immersion, especially for roleplay. Free models shrink output, Pipsqueak cuts itself off, and message length becomes inconsistent enough to damage flow.

What still helps: Switching styles, writing longer prompts to set structure, downvoting short replies, and using pinned chats as templates. These corrections don’t fix everything, and the results are uneven, but they tend to produce longer responses than doing nothing.

Bottom line: Strategy matters more than hoping the model behaves. Structure, correction, and format signals offer the best chance at stable multi-paragraph replies.

Short responses on Character AI have become a problem, especially when the goal is immersive, paragraph-based roleplay.

The shift feels immediate and frustrating: responses that once carried detail now stop after a few lines, cut themselves off, or barely match the effort put into the prompt.

The excitement drops instantly when you put time into a message and get two sentences back. It turns what should be a creative exchange into something that feels unfinished.

We see the same patterns repeat. Models like Pipsqueak start cutting themselves off mid-thought. Free styles lose coherence.

Some sessions randomly alternate between two tiny paragraphs and something longer that feels more like a character again. That inconsistency breaks flow.

It damages longer formats like story building, emotional pacing, and strategic RP scenes.

The problem isn’t imagined. It’s disruptive enough that it limits the experience. Responses shrink, pacing collapses, and a large part of the appeal disappears.

This article draws only on examples users have shared, not on assumptions about how the platform works internally.

What causes Character AI responses to get shorter

Short responses usually aren’t random. The patterns point toward how the models behave rather than hidden technical explanations.

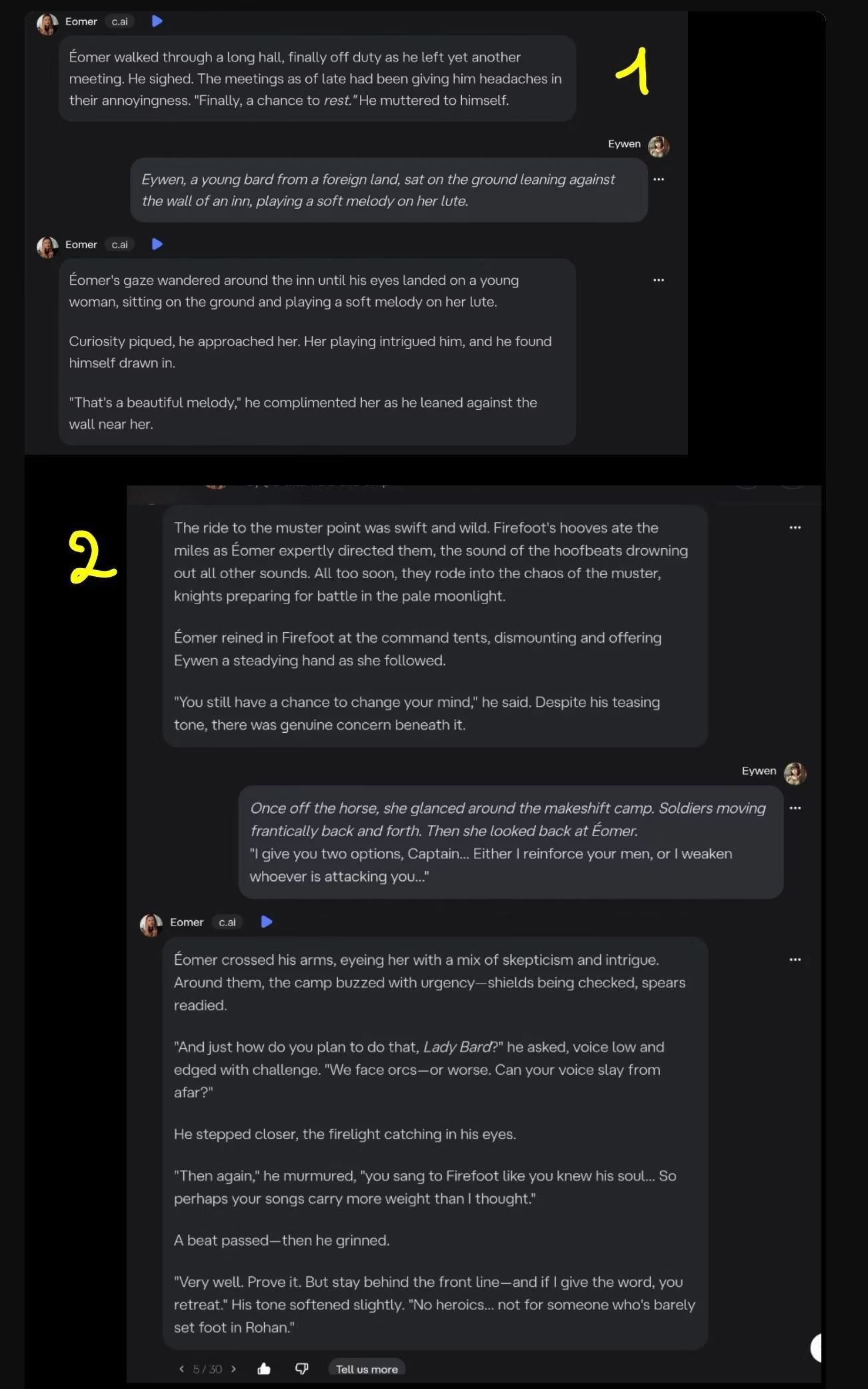

When someone switches to free models, the replies often shrink. Pipsqueak used to generate multiple paragraphs, but now it cuts itself off even when it has more to say.

That shift alone interrupts the experience. The downgrade shows in consistency: sometimes two tiny paragraphs, sometimes something longer, but rarely the stable middle ground people want.

Another issue is how the system mirrors the user. The bot reflects what it sees. Short inputs tend to produce low-effort replies because the model assumes that’s the tone you want.

Longer, structured messages signal that you expect depth. This doesn’t guarantee improvement, but it sets a baseline that the bot tries to match. Free models especially rely on that structure.

There’s a style factor too. If the chat is set to the wrong style or hasn’t been adjusted, the quality and length drop. Some people report better results after manually switching styles.

It isn’t a magic fix, but it’s one of the few adjustments that users report making a visible difference.

How to push for longer responses using what the platform already allows

The most consistent improvement happens when you actively correct the model.

Using the built-in downvote and “Too Short” tag signals that the response didn’t meet expectations. With repetition, replies start stretching into longer paragraphs.

It’s not instant and not guaranteed, but the improvement shows in examples where the second output became noticeably longer than the first.

Longer prompts help. If you write the kind of message you want to receive, the bot sometimes treats it like a template and follows the format.

Greeting messages and pinned chats can act like anchors. When the template looks like the ideal tone, the model tries to echo it.

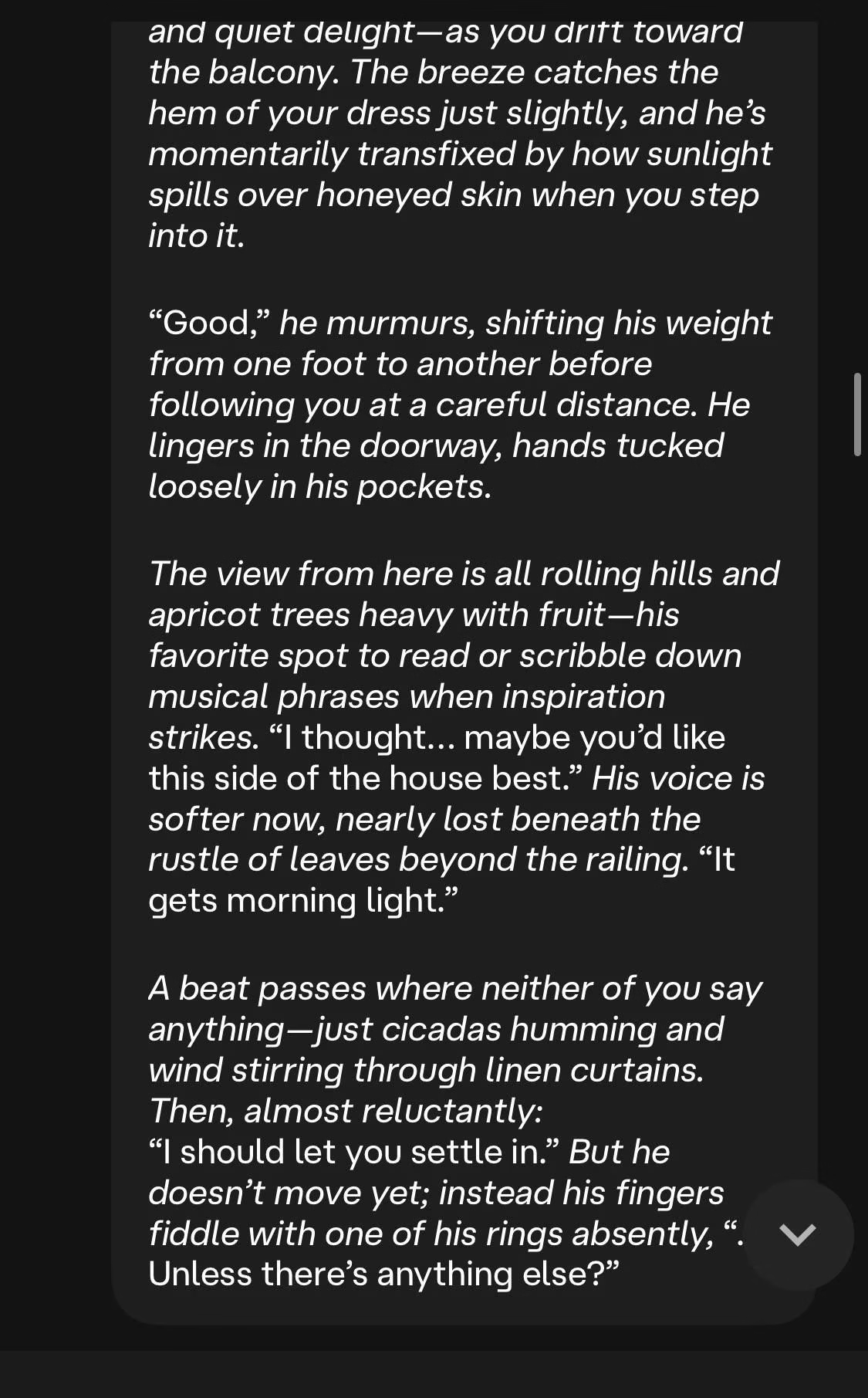

One user also pointed out that the “deep” model occasionally sends significantly longer messages.

That isn’t a system-wide solution, but it’s another example that disproves the idea that short responses are unavoidable on every model.

Free tiers are inconsistent rather than uniformly broken.

Why short responses ruin roleplay flow

Roleplay depends on pacing, emotional buildup, and the back-and-forth rhythm of scene work. When a model replies with three lines, the momentum dies immediately.

You can’t build tension, describe a battlefield, or carry a character’s emotional weight if the response ends before it begins.

The examples show how abrupt cutoffs leave entire scenes hanging, especially with Pipsqueak stopping mid-thought, even when more context is available.

It also affects characterization. When the bot can’t maintain length, personality gets washed out. Instead of feelings, motivations, or reactions, the message becomes structural filler.

The player is forced to either overcompensate with massive replies or accept that the emotional impact is gone. That pressure shouldn’t exist. If a platform advertises depth, then depth shouldn’t depend on luck.

RP suffers most when reply length is unstable. One message might be acceptable, and the next might shrink again.

That unpredictability makes it hard to treat the conversation like a story. It becomes troubleshooting, not storytelling.

What still works if you’re stuck with free models

Free models aren’t unusable, but you need to approach them with strategy. The strongest pattern is that they match your structure.

If you want multi-paragraph replies, you write them. If you want beats, scene breaks, or dialogue tags, you model them.

The greeting message and pinned chats help because they indirectly define length expectations. The bot tries to stay consistent with whatever template you give it.

Bullet points can help clarify expectations when the bot misunderstands what you want:

- Set the style to match your desired length
- Write longer inputs to signal structure
- Downvote short replies and tag them as “Too Short”
- Treat pinned chats as blueprint messages

The improvements are uneven, but they still happen. Some free users manage to get multi-paragraph responses again by reinforcing the structure every time the model shrinks.

It’s not a full solution, but it’s better than accepting the downgrade.