Luma Ray3 reasoning video model explained

Ray3 reasoning video model summary

Luma Ray3 generates native HDR video and critiques its own outputs before delivery. Draft Mode gives fast previews, then you upgrade the best shots to full 4K HDR in minutes.

- Reasoning loop reduces retries and surfaces cleaner takes.

- Visual annotations let you sketch on frames to guide motion and framing.

- Draft Mode previews arrive in ~20s at lower cost, then the best takes upgrade quickly to 4K HDR.

- Pro-ready exports slot into editing workflows without color headaches.

AI video just took a sharp turn with the release of Ray3 from Luma. This new model doesn’t just generate footage.

It also evaluates its own outputs, critiques them, and refines them until they meet higher standards. Luma calls it the world’s first reasoning video model, and the upgrades described below give that claim some substance.

Ray3 creates native HDR video, giving you cinematic detail and color depth. It supports professional editing formats so the footage isn’t locked into a closed system.

The model also introduces annotation controls. You can sketch directly on a frame to guide how the camera should move or how the subject should act in the next shot.

For quick tests, Draft Mode builds rough previews in just 20 seconds at a fraction of the cost, and then you can upgrade the final choice to 4K HDR in minutes.

This reasoning approach matters because it changes the workflow. Instead of sifting through raw outputs, you get a model that can check its own mistakes and offer more usable clips from the start.

That makes AI video more practical for creators who need speed without sacrificing quality.

RoboRhythms.com has tracked many AI releases this year, and Ray3 stands out for bridging reasoning with production-level output.

How Ray3 reasoning changes AI video

Reasoning in Ray3 is not just a technical upgrade. It changes how creators work with AI video.

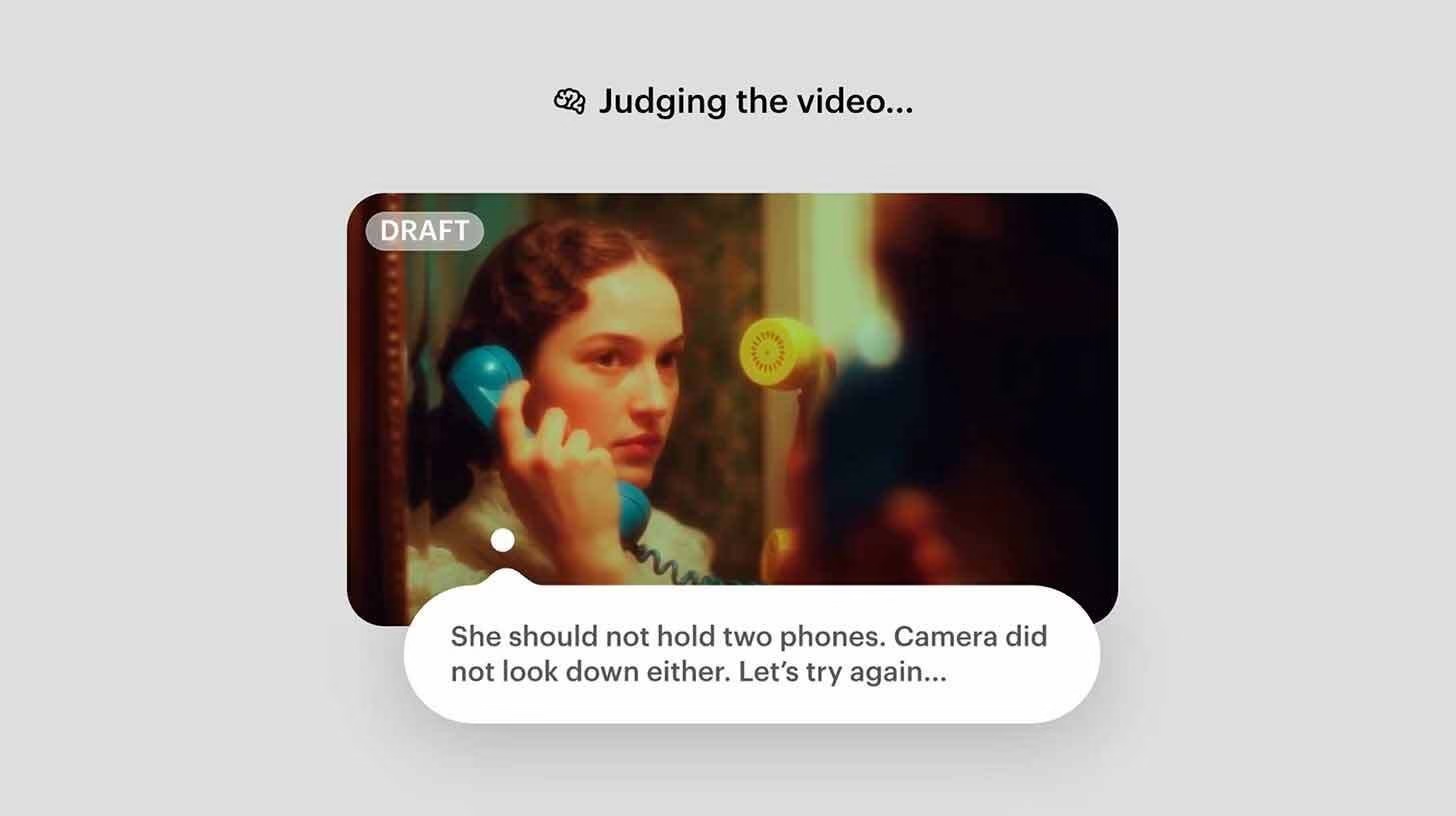

Instead of running multiple prompts and sorting through weak results, the system evaluates its own footage.

It can identify where motion looks unnatural, where colors fail, or where framing is off. That feedback loop means fewer wasted renders and faster access to usable clips.
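To make the feedback loop concrete, here is a minimal conceptual sketch in Python. It is not Luma's implementation and does not call any Luma API. The `Take` class, the three scores, the 0.8 threshold, and the `refine` step are all hypothetical stand-ins chosen only to show the generate, critique, refine pattern described above.

```python
from dataclasses import dataclass


@dataclass
class Take:
    """A candidate clip, reduced to three hypothetical quality scores (0 to 1)."""
    motion: float
    color: float
    framing: float


def critique(take: Take, threshold: float = 0.8) -> list[str]:
    """Return the names of the aspects that score below the threshold."""
    scores = {"motion": take.motion, "color": take.color, "framing": take.framing}
    return [name for name, score in scores.items() if score < threshold]


def refine(take: Take, issues: list[str], step: float = 0.25) -> Take:
    """Stand-in for a re-render: nudge each flagged score upward, capped at 1.0."""
    updated = {name: getattr(take, name) for name in ("motion", "color", "framing")}
    for name in issues:
        updated[name] = min(1.0, updated[name] + step)
    return Take(**updated)


def generate_with_critique(first: Take, max_rounds: int = 3) -> tuple[Take, int]:
    """Critique and refine until no issues remain or the round budget is spent."""
    take, rounds = first, 0
    while rounds < max_rounds:
        issues = critique(take)
        if not issues:
            break
        take = refine(take, issues)
        rounds += 1
    return take, rounds


# A first attempt with weak motion and slightly weak framing.
final, rounds = generate_with_critique(Take(motion=0.5, color=0.9, framing=0.75))
print(rounds, critique(final))  # prints: 2 []
```

The point of the sketch is the control flow: the critique happens inside the loop, so the user only sees a take once no flagged issues remain or the round budget runs out.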

The annotation feature makes this even more powerful. A creator can sketch an arrow to show how the camera should pan, or circle a subject to keep it in focus.

The model interprets those marks and adjusts its next render. This workflow feels closer to directing on set than typing abstract prompts.

It opens the door for non-technical users to guide visuals in a more natural way.

Draft Mode reinforces the practical side. By generating previews in 20 seconds, you get a low-cost test before committing resources.

When a take looks good, upgrading it to 4K HDR in under five minutes saves both money and time. That dual mode gives professionals more control over budgets and turnaround.

The end result is a tool that reduces trial and error.

Ray3’s reasoning system makes AI video more predictable, with better alignment between what a user imagines and what appears on screen.

Key features that set Ray3 apart

Ray3 introduces several features that distinguish it from past AI video tools.

These are the ones that matter most to creators:

- Native HDR output for cinematic color and contrast, compatible with editing workflows.

- Self-critique and iteration so the model improves results before delivering final footage.

- Visual annotation controls that let you sketch directly on frames for movement and camera guidance.

- Draft Mode previews ready in 20 seconds at one-fifth the cost.

- Fast upgrades to 4K HDR in less than five minutes.

These functions together give Ray3 both flexibility and quality. The HDR foundation ensures visual fidelity while reasoning adds consistency.

Annotation builds a new type of creative control, letting input go beyond text. Draft Mode offers speed, while the upgrade path secures production-ready footage.

No single feature alone explains the impact. It’s the combination that signals where AI video is heading: toward smarter, faster, and more controllable workflows.

Why Ray3 matters for creators

Creators often face a tradeoff between speed and quality. Traditional AI video tools can generate visuals quickly, but the results often require multiple retries.

Ray3 addresses that gap. Its reasoning engine makes each render smarter by evaluating mistakes and correcting them before you see the clip.

That reduces the frustration of wasted time.

For filmmakers and editors, the HDR output means shots slot smoothly into professional pipelines. There’s no need to fix color profiles or battle compression issues.

Teams can integrate Ray3 footage into ongoing projects with less technical adjustment. That saves hours in post-production.

Independent creators benefit as well. Draft Mode previews give them a way to test ideas without high costs.

Small studios or solo artists can explore multiple creative directions and only upgrade the shots they truly need.

That makes AI video more accessible to people outside big-budget production houses.

The annotation tools also change how people collaborate. A director, editor, or even a client can draw notes directly onto frames.

Instead of long emails explaining camera movement, those notes become visual guides that Ray3 interprets instantly.

Practical use cases for Ray3

Ray3 is designed for real-world application, not just tech demos.

Here are some areas where it fits naturally:

- Commercial production: Brands can generate HDR product shots or lifestyle scenes, with quick previews helping teams align on a direction before investing in final renders.

- Film pre-visualization: Directors can block out shots in Draft Mode to test camera moves, then finalize key sequences in HDR quality to present to producers.

- Music videos: Artists can sketch out concepts and let Ray3 handle experimental visuals. Draft Mode previews allow for fast iteration on abstract or stylized ideas.

- Social content: Creators who need high-quality footage on short timelines can rely on quick previews and targeted upgrades without blowing their budget.

- Client collaboration: Agencies can use annotation features during feedback sessions. Clients sketch their changes directly on a frame, reducing miscommunication.

These use cases show how Ray3 is positioned as more than a novelty.

It can streamline creative processes across industries, especially where time, clarity, and cost control matter.

How Ray3 compares with past AI video models

Earlier AI video models focused on scale and visual appeal but lacked refinement. They could generate impressive-looking scenes, yet the results often came with flaws in motion or lighting.

Users had to run several generations, manually select the best one, and then patch errors in editing.

Ray3 shifts that dynamic. Its built-in reasoning system reduces the need for external correction. The model critiques itself and iterates before outputting the final video.

That makes the workflow more reliable and reduces the time between idea and production.

Draft Mode also sets Ray3 apart from competitors. Many other models require a full-quality render for every attempt, which increases cost and slows testing.

With Ray3, creators can preview multiple directions cheaply and only upgrade what works. That feature alone makes it more suited for professional and budget-conscious users.
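A rough back-of-the-envelope comparison shows why this matters. The sketch below uses the two figures quoted in this article (drafts at one-fifth the cost, about 20 seconds each) and measures cost in "full-render units". It assumes, without confirmation from Luma, that upgrading a draft costs the same as one full-quality render. The function names are illustrative only.

```python
from fractions import Fraction

# Figures quoted in the article.
DRAFT_COST_RATIO = Fraction(1, 5)  # a draft costs one-fifth of a full render
DRAFT_SECONDS = 20                 # approximate draft preview time

# Assumption (not stated in the article): upgrading a draft costs the same
# as one full-quality render.
UPGRADE_COST = Fraction(1)


def full_quality_cost(candidates: int) -> Fraction:
    """Cost, in full-render units, of rendering every candidate at full quality."""
    return Fraction(candidates)


def draft_then_upgrade_cost(candidates: int, keepers: int) -> Fraction:
    """Cost of drafting every candidate and upgrading only the keepers."""
    if not 0 <= keepers <= candidates:
        raise ValueError("keepers must be between 0 and candidates")
    return candidates * DRAFT_COST_RATIO + keepers * UPGRADE_COST


candidates, keepers = 10, 2
baseline = full_quality_cost(candidates)
staged = draft_then_upgrade_cost(candidates, keepers)
print(f"full quality every time: {baseline} units")   # 10 units
print(f"draft then upgrade:      {staged} units")     # 4 units
print(f"saving:                  {float(1 - staged / baseline):.0%}")  # 60%
print(f"time spent on drafts:    {candidates * DRAFT_SECONDS} s")      # 200 s
```

Under these assumptions, testing ten directions and keeping two costs 4 units instead of 10. The saving shrinks as the share of upgraded takes grows, so the staged workflow pays off most when you explore widely and keep few.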

Annotation controls are another leap. Most past tools relied mainly on text prompts, which limited creative precision.

By letting users sketch on frames, Ray3 bridges the gap between imagination and output in a way other models have not.