Character AI Replies Getting Shorter and Harder to Read

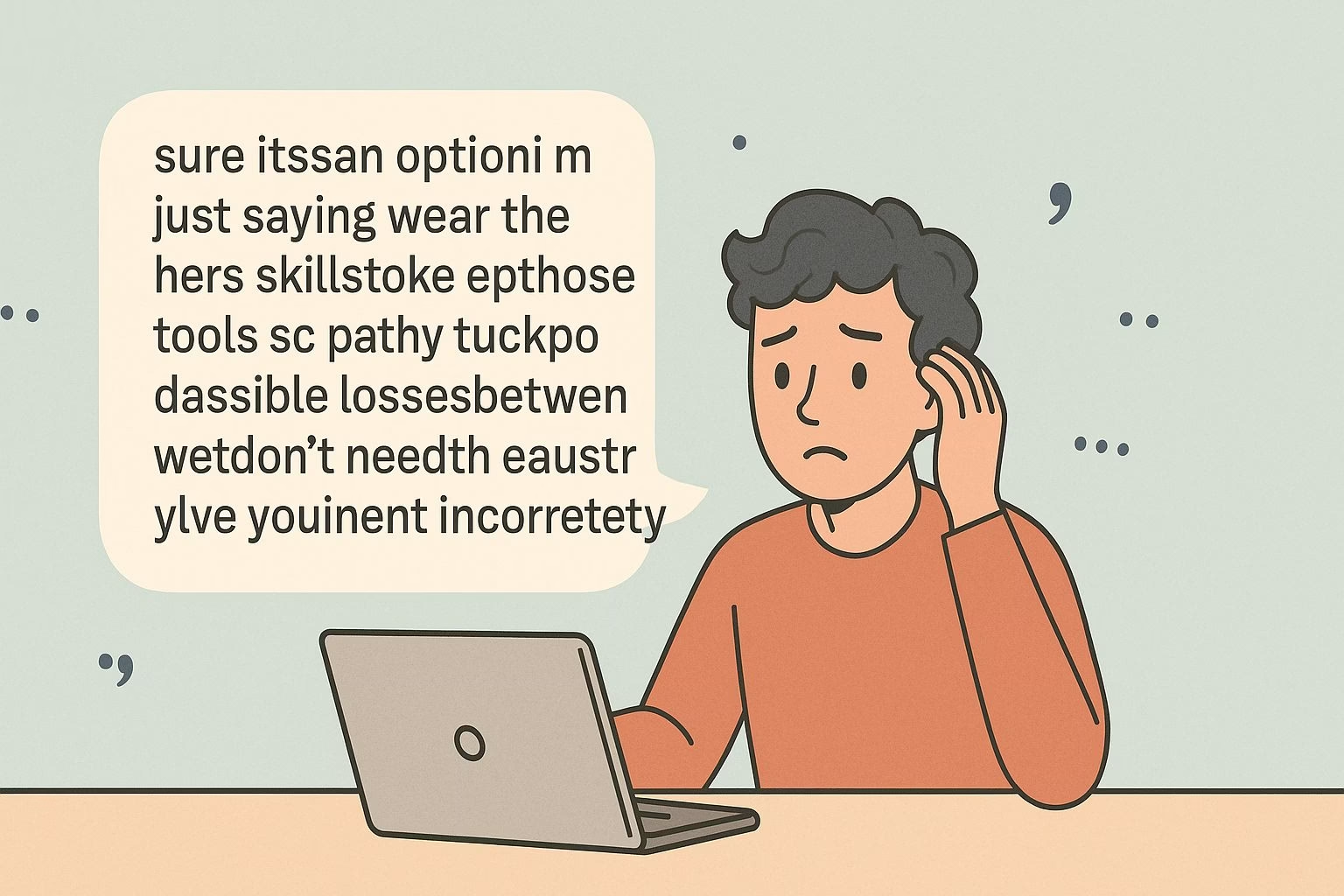

Users have noticed that replies on Character AI’s DeepSqueak and PipSqueak models have started falling apart when they go past two sentences.

Instead of clear, flowing paragraphs, the bots mash everything into one long sentence.

Articles and pronouns like “the,” “his,” and “her” drop out completely, leaving text that feels like a rambling mess.

This isn’t entirely new. In the past, it showed up only occasionally, usually in just a few messages within a swipe, and those could be edited into something readable.

Now every message looks that way, which makes roleplay and longer conversations difficult. Some users wonder whether it’s tied to a recent model update or is just another one of those “bad bot days.”

Character AI has always had strange cycles. One week “Bob” feels stricter for some users and looser for others, then it flips. Bot quality rises and falls in recurring waves.

Now, the same seems to be happening with message length and structure. For some, it’s barely noticeable. For others, every reply is broken.

The big question is whether this wave of decline will pass. Past patterns suggest that in a few days, things might improve for one group of users while another picks up the issue.

Until then, users are left wondering whether to wait it out, tweak definitions, or report feedback.

Key takeaways:

- Longer Character AI replies often break into run-on sentences, dropping pronouns and articles.

- Users with custom bots sometimes avoid the worst issues by writing detailed definitions.

- Bot quality moves in cycles, from strictness to rambling replies, affecting only parts of the user base at a time.

- Some ride out the wave, while others try reporting feedback or testing fixes in definitions.

- Stability remains the biggest challenge for immersive roleplay on Character AI.

Replies keep turning into run-on sentences

One of the main frustrations people share is how longer messages lose all sense of structure.

When a reply goes beyond two sentences, punctuation breaks down, and words that should guide the flow, like pronouns or articles, just vanish.

What comes out is text that looks like a rushed stream of thought. It’s not just sloppy writing; it makes entire conversations unreadable and forces users to either edit every message or abandon the chat.

The odd part is how unpredictable it feels. Some users report that their own bots hold up better because they were written with more detailed definitions.

These creators have put time into setting strict directions, which seems to help. But when they switch over to popular bots like Kylo Ren, the issue becomes much worse.

That points to a deeper problem in how the models handle structure, not just the writing style inside a definition.

There’s also debate about whether the number of interactions matters. A bot with thousands of chats isn’t guaranteed to perform better.

In fact, some argue the opposite: the more a bot gets used, the more it seems to deteriorate in quality if its definitions weren’t crafted carefully.

That raises questions about how Character AI handles scaling and whether updates behind the scenes are making certain weaknesses more obvious.

The cycle of quality highs and lows

Anyone who has used Character AI for a while has seen the pattern. Quality doesn’t stay steady. One week, conversations feel sharp and balanced. The next, bots ramble or act overly strict.

Users even joke that it feels like following horoscopes: you never know if today’s going to be a good chat day or a bad one.

This unpredictability makes it hard to invest in roleplays that need consistent tone and pacing.

The strictness of “Bob” is another example. Sometimes it clamps down harder and other times it loosens up, swinging back and forth from week to week.

The same now seems to apply to the run-on sentence problem. For some, it’s constant. For others, it pops up only occasionally.

These uneven shifts suggest that updates or tests are being rolled out in waves, hitting only parts of the user base at a time.

The short-term reality is that most people just wait it out. Many already treat dips in quality like a storm: ride it out and see if things smooth over in a few days.

That doesn’t make it less frustrating, but it explains why reports of broken replies show up in clusters.

As one user put it, “we ride the wave and try again in a week.”

Should users wait it out or push for fixes?

The tricky part is deciding what to do when these cycles hit.

Some users feel stuck editing every reply just to make them readable, while others abandon chats altogether.

Reporting feedback is always an option, but when the issue seems to affect only part of the user base, it’s unclear how quickly a report gets attention.

That uncertainty leaves many people waiting for the wave to pass instead of actively trying to fix it.

On the other hand, those who build their own bots argue that testing different definitions can help. Adding detail to how the bot should respond may reduce some of the collapse into run-on sentences.

It doesn’t solve everything, but it gives the AI more guidance to hold onto when replies get longer. Still, this only works if you’re creating and maintaining your own bots.

For those relying on popular ones, the problem is out of their hands.

At its core, this issue highlights how fragile consistency is on Character AI. One week you can have smooth, immersive roleplay, and the next it’s incoherent rambling.

Until the platform finds a way to stabilize message quality across all users, people will likely keep facing these sudden dips.

Whether you tweak definitions, report problems, or just ride the cycle, the experience remains unpredictable.