Alibaba Qwen Releases Powerful Open-Source Image Editing Model

Alibaba’s Qwen team has released Qwen-Image-Edit, a 20B-parameter open-source model built for advanced image editing.

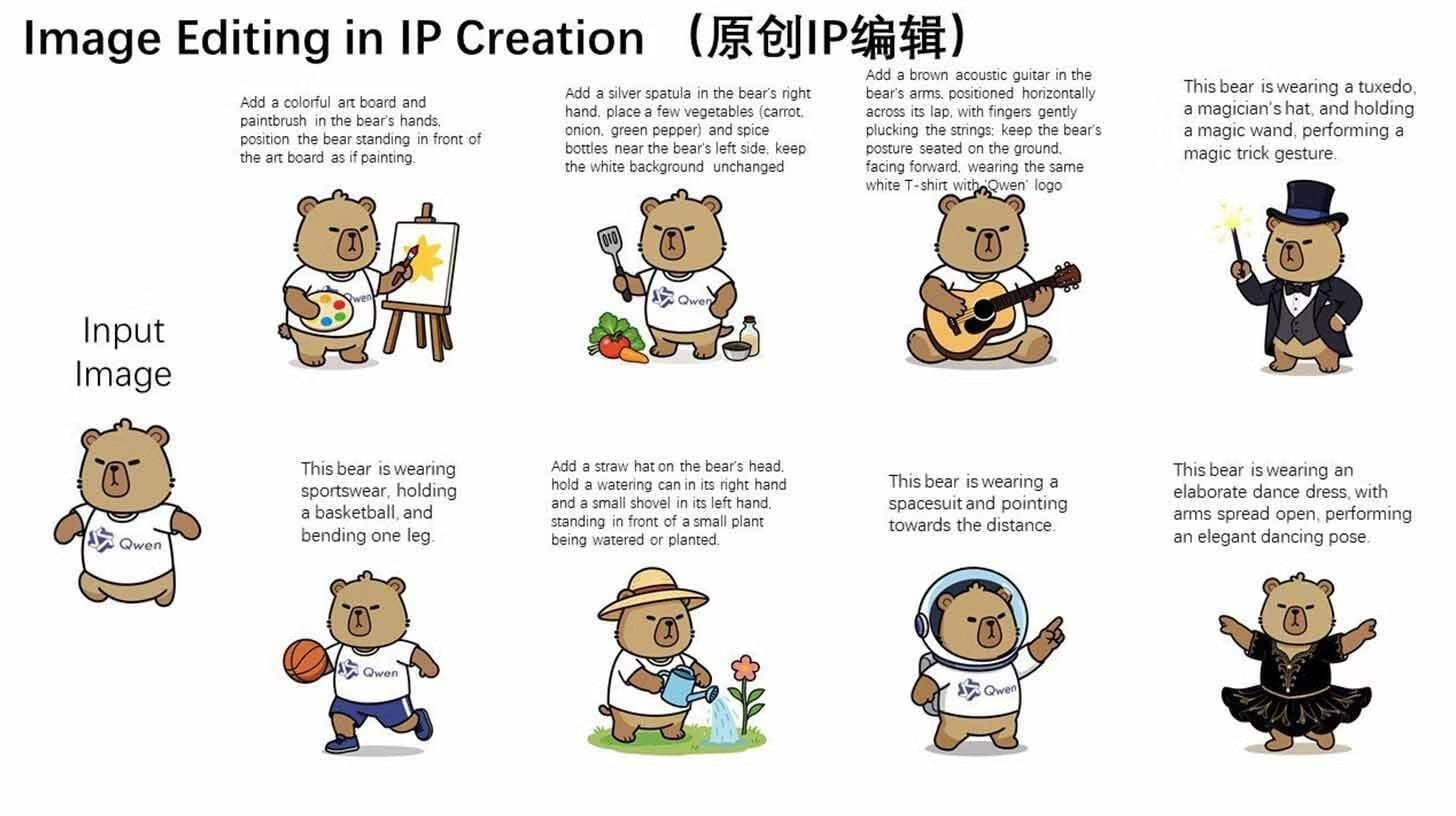

Unlike most AI tools that focus only on generation, this one is designed to handle both pixel-perfect edits and full style transformations while preserving the integrity of objects and characters in the image.

The model takes a structured approach to editing. It separates tasks like rotating objects or applying style transfers from more precise adjustments to specific areas of an image.

This makes it easier for users to stack multiple edits together without restarting from scratch.

It also comes with built-in bilingual support, allowing direct modification of both Chinese and English text in images without breaking fonts, formatting, or sizing.

According to benchmark results published by the Qwen team, Qwen-Image-Edit outperforms existing solutions such as Seedream, GPT Image, and FLUX.

With features like granular text control, complex edit stacking, and strong benchmark results, it represents one of the first serious steps toward AI systems that excel at editing rather than only generating.

Because the model is open source, developers and researchers can begin testing and adapting it right away.

With the hype around the anonymous “nano-banana” image model also spreading through LM Arena, it feels like we are entering a stage where natural language editing of images is no longer a futuristic idea but an accessible reality.

How Qwen-Image-Edit Works

Qwen-Image-Edit is designed around two main editing tracks. The first handles broad transformations, such as rotating objects, changing colors, or shifting the entire style of an image.

The second focuses on targeted edits where users want to modify a specific area while leaving the rest untouched.

This split makes the editing process smoother because it allows users to choose the right tool for the job without disrupting the whole image.

Another standout feature is its approach to text editing. The model can directly alter both Chinese and English text inside images without breaking the original fonts, sizes, or formatting.

This matters because most image editors either ignore text or force users to replace it manually. Qwen-Image-Edit keeps the layout intact, which is especially useful in cases like product packaging, posters, or memes where typography is part of the design.

The model also supports stacked edits. Users can perform one change, then another, and keep layering without starting over.

For example, you could rotate an object, shift its color, and then adjust the background while keeping everything consistent.

This workflow makes complex edits less frustrating and more manageable, especially compared to older models that struggled to retain image quality after multiple modifications.
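In practice, stacking edits means feeding each result back in as the input for the next instruction. The Python sketch below shows that loop. It assumes the Hugging Face `diffusers` integration (`QwenImageEditPipeline`) and the `Qwen/Qwen-Image-Edit` model ID as shown on the model card at release, plus `torch`, Pillow, and a GPU large enough for a 20B model. The helper names `stack_edits` and `make_qwen_edit` are hypothetical, and the sampling settings are the model card's example values, not tuned recommendations.

```python
from typing import Callable, List, Sequence, TypeVar

Img = TypeVar("Img")


def stack_edits(image: Img, prompts: Sequence[str],
                edit: Callable[[Img, str], Img]) -> List[Img]:
    """Apply each prompt in turn, feeding every result into the next edit.

    Returns the full history (original first) so any intermediate version
    can be inspected or reused without re-running the earlier edits.
    """
    history = [image]
    for prompt in prompts:
        history.append(edit(history[-1], prompt))
    return history


def make_qwen_edit(model_id: str = "Qwen/Qwen-Image-Edit",
                   device: str = "cuda", seed: int = 0):
    """Hypothetical wrapper turning the diffusers pipeline into edit(image, prompt).

    Assumes a diffusers release that ships QwenImageEditPipeline. Imports are
    done lazily so stack_edits can be used without torch/diffusers installed.
    """
    import torch
    from diffusers import QwenImageEditPipeline

    pipe = QwenImageEditPipeline.from_pretrained(
        model_id, torch_dtype=torch.bfloat16
    ).to(device)

    def edit(image, prompt):
        # One editing pass; settings mirror the model card's example call.
        with torch.inference_mode():
            out = pipe(
                image=image.convert("RGB"),
                prompt=prompt,
                negative_prompt=" ",
                true_cfg_scale=4.0,
                num_inference_steps=50,
                generator=torch.manual_seed(seed),
            )
        return out.images[0]

    return edit


# Example usage (requires the model weights and a suitable GPU):
# from PIL import Image
# edit = make_qwen_edit()
# steps = stack_edits(Image.open("product.png"), [
#     "Rotate the bottle 90 degrees to the right",
#     "Change the bottle's color to dark green",
#     "Replace the background with a plain white studio backdrop",
# ], edit)
# steps[-1].save("final.png")
```

The edit function is passed in rather than hard-coded, so the chaining logic stays independent of the model and could point at a different backend without changes.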

Where It Stands Against Rivals

Benchmarks show Qwen-Image-Edit performing ahead of other tools such as Seedream, GPT Image, and FLUX.

These comparisons matter because each of those models has been praised for strong editing in specific areas, yet they often falter in consistency or complexity.

Qwen-Image-Edit delivers sharper results across different benchmarks, especially when multiple edits are stacked.

Another advantage is its open-source release. Seedream and GPT Image are closed models, and FLUX is only partially open, which limits how much the wider community can contribute to testing or building on top of them.

Qwen’s decision to make Qwen-Image-Edit public means researchers and developers can stress-test it quickly and push the boundaries of what the model can handle.

The bilingual text editing also sets it apart. GPT Image and others can generate text, but they rarely manage proper formatting when edits are required.

Qwen-Image-Edit is one of the first to treat typography with the same care as objects or styles. This detail makes it a practical tool rather than just a technical showcase.

Qwen-Image-Edit vs Other Image Editing Models

| Feature | Qwen-Image-Edit | Seedream | GPT Image | FLUX |
|---|---|---|---|---|
| Edit Types | Pixel-perfect edits and style transformations with object/character integrity | Strong style transfer, less precise object handling | General edits but inconsistent retention | Focused edits, struggles with complex layering |
| Text Editing | Direct bilingual edits (Chinese & English) with formatting preserved | Limited text handling | Generates text but often breaks fonts/layout | Weak text editing support |
| Stacked Edits | Supports multiple edits without restart | Struggles with layered edits | Degrades quality after multiple changes | Inconsistent results after stacking |
| Open Source | Yes, fully open source | No | No | Limited access |
| Benchmark Performance | Outperforms rivals in SOTA tests | Good in narrow cases | Average | Strong in select benchmarks but less versatile |

Why This Release Matters for AI Editing

Most AI tools until now have been focused on generating new images rather than refining existing ones.

Qwen-Image-Edit changes that balance by showing that edits can be as reliable and controlled as generating an image from scratch.

This shift is important because in many real-world scenarios, people don’t want to start from scratch. They want to keep the base image intact and make targeted adjustments without losing quality.

Another reason this release stands out is accessibility. By making the model open-source, Alibaba has ensured that it won’t just remain an internal experiment.

Developers, researchers, and even hobbyists can test it, report weaknesses, and build applications around it. This creates a faster loop of improvement compared to closed systems.

It also highlights a trend: as models grow more capable, users will demand finer control instead of just flashy outputs.

Editing features like bilingual text modification or clean stacking of edits are small details on paper, but they show that the focus is moving toward practical usability.

This marks a shift in how AI models are judged: no longer just on how striking their generations look, but on how well they handle complex, detailed workflows.

Practical Use Cases of Qwen-Image-Edit

The ability to make edits without breaking the entire image opens up many possibilities. Designers can update product labels or posters without redoing the full layout.

Marketing teams can localize campaigns by switching between Chinese and English text while keeping the design consistent.

Content creators can adjust colors, angles, or backgrounds on the fly without having to rebuild from scratch.

For smaller teams or individuals, the model reduces the need for heavy editing software.

Someone making social media graphics could swap out text, alter object placement, or apply style changes directly through natural language prompts.

This lowers the barrier to entry for high-quality visual work.

Even technical fields can benefit. Engineers working on simulations or presentations could use it to refine diagrams. Teachers could adapt learning materials for different audiences.

The fact that edits can stack means users can fine-tune images over time instead of relying on one perfect prompt.

This level of flexibility is what makes Qwen-Image-Edit more than just another research project; it feels like a tool people could actually build into their workflows.

Closing Thoughts

Qwen-Image-Edit represents a clear step forward in AI editing. Instead of focusing on flashy generations, it delivers tools that make controlled, detailed changes possible.

The ability to edit text in two languages, preserve design integrity, and stack multiple edits without degrading quality sets it apart from what came before.

What makes this release even more important is its open-source availability.

By giving the community direct access, Alibaba has ensured that the model will be tested in real workflows rather than confined to lab results.

As a result, we can expect rapid improvements, creative applications, and real adoption by designers, creators, and developers.

With strong benchmark results and features that solve everyday editing challenges, Qwen-Image-Edit shows that editing is becoming as much a focus as generation.

For anyone watching the growth of AI in creative work, this is a model worth keeping an eye on.